Impact of online closed-book examinations on medical students’ pediatric exam performance and test anxiety during the coronavirus disease 2019 pandemic

Introduction

Since coronavirus disease 2019 (COVID-19) was declared a pandemic by the World Health Organization (WHO) on March 11, 2020 (1), it has resulted in a huge challenge to education systems, leading to worldwide cancellation of lectures, placements, and exams and the closure of medical schools (2).

Tests are used as an essential and effective assessment tool to promote the retention of knowledge. In the midst of the COVID-19 crisis, end-of-term examinations are challenging when medical students are sent home. It is inappropriate to cancel or suspend end-of-year examinations since this may cause millions of students to feel left in the lurch (3) or even worsen their mental health.

Although alternative methods of assessment via open-book examination (OBE) are emerging, the validity and fairness of remote-access OBEs are of concern for final-year examinations during the pandemic (4,5). At Shanghai Medical College of Fudan University, the third-year medical students’ pediatric final examination went ahead as planned and was completed as a closed-book examination (CBE) as usual but from home via an online platform.

Little is known about the impact of end-of-term online CBEs on medical students’ exam performance, test anxiety and possible contributing factors during the COVID-19 pandemic. Evidence-based experience of online CBEs is helpful to medical teachers to optimize the setup of online CBEs during this pandemic. We conducted this study with the aims of bridging this gap, identifying underlying factors that contribute to the impact, and providing evidence to help alleviate the negative impact of online examinations on exam performance and test anxiety. We present the following article in accordance with the SURGE reporting checklist (available at http://dx.doi.org/10.21037/pm-20-80).

Methods

Ethical approval

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013) and was approved by the Research Ethics Committee of the Children’s Hospital of Fudan University (NO.:2020-300). Informed consent was obtained from all the participants.

Subject selection

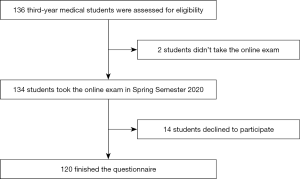

A prospective study was conducted among all third-year undergraduate medical students from Shanghai Medical College of Fudan University who took a pediatric course between February 24 and July 10, 2020. Students who did not take the online final examinations and those who did not consent to participate in the study were excluded from the analysis (Figure 1). None of the enrolled students had experience with online CBE before the final exam. A total of 120 medical students were included in this study, representing 89.6% of all targeted medical students. The response rate of the survey was 100%.

Data collection

An online electronic questionnaire was sent to the 120 study participants after the final exam on July 10, 2020. The survey collected information on gender, specialty, satisfaction with the online examination platform, preference for online versus classroom examination, the perceived impact of the online examination on exam performance, and anxiety level at the time of the online examination. Anxiety was rated on a 10-point self-assessment scale by comparison with the participants’ experience of classroom examinations before the COVID-19 pandemic; scores ran from 0 to 10, with 0 representing no change in anxiety and 10 representing the highest degree of change in anxiety.

The medical students were also asked to score their willingness to participate in future online CBEs from –100 to 100. Zero to 100 describes a preference for online examination, and zero to –100 describes a preference for classroom examination. The higher the absolute value, the greater the respondent’s preference for online or classroom examination.
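The scoring rule described above can be illustrated with a short Python sketch. It is not part of the study instrument: the function name `describe_preference` is hypothetical, and the "no preference" label for a score of exactly zero is an assumption, since the questionnaire description does not say how zero was interpreted.

```python
def describe_preference(score: float) -> tuple[str, float]:
    """Map a willingness score in [-100, 100] to (preferred format, strength)."""
    if not -100 <= score <= 100:
        raise ValueError("score must lie between -100 and 100")
    if score > 0:
        mode = "online"
    elif score < 0:
        mode = "classroom"
    else:
        mode = "no preference"  # assumption: the source does not define zero
    # The absolute value expresses how strong the preference is.
    return mode, abs(score)


print(describe_preference(-29.3))
print(describe_preference(39.8))
```

A negative score is thus read as a preference for classroom examination, and its absolute value as the strength of that preference.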

According to the curriculum and teaching content of pediatrics, the final pediatric examination has a total score of 100 points and must be finished within 120 minutes. The content of the examination includes 40 multiple-choice questions (MCQs) accounting for 40 points that assess students’ ability to integrate clinical reasoning and decision-making skills and four essay questions that assess students' comprehensive abilities, such as case analysis and clinical thinking, with a total score of 60 points. Each question has a unified score point.

The scores for the final examination of the pediatric course in the spring semesters of 2020 and 2019 were collected at the same time and represented performance in online and classroom examinations, respectively.

For the online examination, students were required to set up two electronic devices at home. The first one was used to access the exam platform, and the second one was used to monitor the home environment. A simulation test two days prior to the formal examination was arranged to minimize network- or technical-related anxiety, which could result in an adverse impact on exam performance. Students were required to access the online examination and invigilator platforms to become familiar with both of them and with their functions. For the MCQs, they had to choose the right answer on the exam platform, while for the essay questions, they could either type the answer on the device or use the device camera to take photos of the written answer sheet and upload them to the platform. Their feedback on these two platforms was collected after they completed the final exam. Seven teachers simultaneously supervised the exam online to ensure fairness.

Statistical analysis

Continuous variables are expressed as the means ± standard deviations. Categorical variables are presented as percentages. Differences in the medical students’ final scores via online or classroom examination and their anxiety scores according to gender and age were evaluated using t-tests. Univariate analyses of categorical data were conducted with chi-square and Fisher’s exact tests. One-way ANOVA was applied to age and the scores of different impacts of the online examination. Differences were considered statistically significant with a two-tailed P value <0.05. The analysis was performed using IBM SPSS 25.0.

Results

Participants and final exam scores

Table 1 shows the baseline characteristics of the enrolled participants. A total of 120 and 127 medical students with a mean age of 21 years took the online examination in 2020 and classroom examination in 2019, respectively. The participants were from three different specialties: preclinical medicine, preventive medicine and pharmacy. The percentage of male participants (37.5%) was lower than that of female participants (62.5%). Overall, there was no significant difference in age (P=0.373), gender (P=0.239) or total scores (P=0.216) between the online and classroom examination groups.

Table 1

| Characteristics | Online examination§ (N=120) | Classroom examination¶ (N=127) | P value |
|---|---|---|---|
| Age, year | 21.04±0.272 | 21.02±0.177 | 0.373 |
| Sex (male), no./total (%)‡ | 45/120 (37.5) | 57/127 (44.9) | 0.239 |
| Preclinical Medicine | 7/13 (53.8) | 21/33 (63.6) | 0.540 |
| Preventive Medicine | 35/99 (35.4) | 33/80 (41.3) | 0.419 |
| Pharmacy | 3/8 (37.5) | 3/14 (21.4) | 0.624 |
| Final exam scores† | 67.7±12.9 | 65.9±9.5 | 0.216 |

Data presented as no./total (%) or means ± SD. ‡, there was no significant difference in age, gender and total scores between the online and classroom exam groups; §, students who took the pediatric course in the spring semester of 2020 and participated in the online final examination; ¶, students who took the pediatric course in the spring semester of 2019 and participated in the classroom examination; †, the sum of the MCQ and essay question scores.
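As a rough plausibility check on the score comparison in Table 1, a t statistic can be recomputed from the published summary values alone. The sketch below is illustrative and not the authors' SPSS procedure: it assumes Welch's unequal-variance form of the t-test, and it approximates the P value with the standard normal distribution, which is reasonable only because the degrees of freedom are large. The function name `welch_t` is hypothetical.

```python
import math
from statistics import NormalDist


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Return (t, df) for Welch's unequal-variance t-test from summary data."""
    v1, v2 = sd1**2 / n1, sd2**2 / n2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Final exam scores from Table 1: online (2020) vs classroom (2019).
t, df = welch_t(67.7, 12.9, 120, 65.9, 9.5, 127)

# Assumption: with roughly 200 degrees of freedom the t distribution is close
# to the standard normal, so a normal approximation is used for the P value.
p_approx = 2 * (1 - NormalDist().cdf(abs(t)))

print(f"t = {t:.2f}, df = {df:.0f}, approximate two-tailed P = {p_approx:.2f}")
```

Under these assumptions the approximate P value is close to the reported 0.216; an exact match is not expected because the inputs are rounded summary statistics.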

Impact of online examination on exam performance of medical students

Table 2 shows the distribution of age, specialty, gender, satisfaction and final exam scores by the nature of different impacts (no, positive and negative impact) of the online examination on students’ exam performance. There was no significant difference in age (P=0.474), specialty (P=0.574) or final exam score (P=0.757). There was a significant difference in the sex group (P=0.011). The percentage of “no impact” was similar in both the male and female groups, 44.4% and 44.0%, respectively. However, more female students than male students thought the online examination had a negative impact on their exam performance (48.0% and 28.9%, respectively; P=0.003). Moreover, students in the negative impact group were least satisfied with the online exam platform.

Table 2

| Group | No impact (N=53) | Positive (N=18) | Negative (N=49) | P-value |
|---|---|---|---|---|
| Age, year | 21.0±0.1 | 21.0±0.1 | 21.1±0.4 | 0.474 |
| Specialties, no./total (%) | | | | |
| Preclinical medicine | 7/13 (53.8) | 2/13 (15.4) | 4/13 (30.8) | |
| Preventive medicine | 43/99 (43.4) | 16/99 (16.2) | 40/99 (40.4) | 0.574 |
| Pharmacy | 3/8 (37.5) | 0/8 (0) | 5/8 (62.5) | |
| Scores | | | | |
| Total scores | 67.3±15.8 | 69.8±10.5 | 67.4±10.0 | 0.757 |
| MCQ | 22.7±5.8 | 23.8±5.3 | 23.1±4.6 | 0.766 |
| Essay questions | 44.5±11.7 | 46.0±7.1 | 44.3±7.3 | 0.800 |
| Gender, no./total (%) | | | | |
| Male | 20/45 (44.4) | 12/45 (26.7) | 13/45 (28.9) | 0.011 |
| Female* | 33/75 (44.0) | 6/75 (8.0) | 36/75 (48.0) | |
| Satisfaction rate with the online platform, no./total (%) | 48/53 (90.6) | 18/18 (100) | 39/49 (79.6) | 0.054 |

Data presented as no./total (%) or means ± SD. There were no significant differences (P≥0.05) between the two groups for baseline variables. *, the percentage of the female students who thought the online examination had a negative impact on the final exam was significantly higher than that of the male students (P=0.003).
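The gender comparison in Table 2 can be recomputed from the published counts. The following Python sketch is illustrative only; it assumes a Pearson chi-square test of independence without continuity correction on the 2 × 3 table of gender by perceived impact, and the helper name `pearson_chi_square` is hypothetical.

```python
import math

# Rows: male, female. Columns: no impact, positive, negative (Table 2).
observed = [
    [20, 12, 13],
    [33, 6, 36],
]


def pearson_chi_square(table):
    """Return (statistic, degrees of freedom) for an r x c contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (obs - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(col_totals) - 1)
    return stat, dof


stat, dof = pearson_chi_square(observed)

# For exactly 2 degrees of freedom the chi-square survival function
# has the closed form exp(-x / 2), so no statistics library is needed.
assert dof == 2
p_value = math.exp(-stat / 2)

print(f"chi-square = {stat:.2f}, df = {dof}, P = {p_value:.3f}")
```

With these counts the sketch gives a P value of about 0.011, in line with the value reported for gender in Table 2.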

Impact of online examination on medical students’ test anxiety

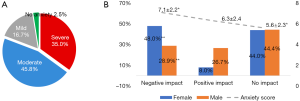

By comparison with their experience of classroom examinations before the pandemic, medical students were asked to score their anxiety level on a 10-point self-assessment scale. The participants’ mean anxiety score was 6.28±2.3. The anxiety scores of female students were similar to those of male students, 6.49±2.56 and 5.91±2.7, respectively (P=0.187). The mean anxiety scores for preclinical medicine, preventive medicine and pharmacy were 6.23±1.7, 6.24±2.4, and 6.75±2.3, respectively. The mean anxiety scores for male and female participants were 5.9±2.7 and 6.5±2.1, respectively. No significant differences were found among specialties (P=0.839) or between genders (P=0.187).

Figure 2A illustrates the distribution of the degree of changes in the anxiety levels of the 120 medical students. Only 3 students (2.5%) did not feel anxious, 20 (16.7%) scored less than 3, 55 (45.8%) scored between 4 and 7, and 42 (35.0%) scored more than 8. Overall, 97.5% (117/120) of the medical students experienced varying degrees of test anxiety.
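The proportions quoted for Figure 2A follow directly from the counts. The short Python check below is illustrative; the labels "none", "mild", "moderate" and "severe" are assumed names for the four score bands and are not defined in this paragraph.

```python
# Counts read from the text for Figure 2A (120 respondents in total).
counts = {"none": 3, "mild": 20, "moderate": 55, "severe": 42}
total = sum(counts.values())

# Share of each band, as a percentage rounded to one decimal place.
shares = {label: round(100 * n / total, 1) for label, n in counts.items()}

# Share of students reporting any change in anxiety (everyone except "none").
anxious_share = round(100 * (total - counts["none"]) / total, 1)

print(total)
print(shares)
print(anxious_share)
```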

Figure 2B presents the anxiety scores in different impact groups for the online CBE. The mean anxiety score ranged from 7.1±2.2 in the ‘negative impact’ group to 5.6±2.3 in the ‘no impact’ group. The students who thought the online examination had a negative impact on exam performance had significantly higher mean anxiety scores than those who did not (P=0.011). Moreover, the percentage of female students who experienced a negative impact of the online examination was significantly higher than that of male students (P=0.003).

Correlation of test anxiety and exam performance

Figure 3A illustrates the distribution of gender, the impact of the online examination, and the total final exam scores by different degrees of anxiety. Students in the no, mild, moderate and severe anxiety groups had similar final exam scores, 65.6±17.6, 68.9±11.6, 67.0±15.6 and 68.1±9.0, respectively. There was no significant difference in the final exam scores among the different degrees of anxiety, genders and impact groups (F=0.148, P=0.931).
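The F statistic for exam scores across anxiety groups can be approximated from summary values. The sketch below is illustrative and rests on a stated assumption: the group sizes (3, 20, 55 and 42) are taken from the Figure 2A distribution and are presumed to correspond to the no, mild, moderate and severe anxiety groups. The function name `anova_f_from_summary` is hypothetical.

```python
# (n, mean, SD) per anxiety group. Means and SDs are from the text above;
# the group sizes are an assumption taken from the Figure 2A distribution.
groups = [
    (3, 65.6, 17.6),   # no anxiety
    (20, 68.9, 11.6),  # mild
    (55, 67.0, 15.6),  # moderate
    (42, 68.1, 9.0),   # severe
]


def anova_f_from_summary(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / n_total
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    # Within-group sum of squares, recovered from each group's SD.
    ss_within = sum((n - 1) * sd**2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


f_stat, df_b, df_w = anova_f_from_summary(groups)
print(f"F({df_b}, {df_w}) = {f_stat:.3f}")
```

Under this assumption the result is close to the reported F=0.148; a small discrepancy is expected because the means and SDs are rounded.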

Figure 3B illustrates the medical students’ preference for future online examinations by gender, degree of anxiety and perceived impact of the online examination on exam performance. A total of 68.0% (51/75) of female students and 48.9% (22/45) of male students preferred classroom exams. Female students were more willing to have a classroom exam than male students, with preference scores of –29.3±65.4 and 1.24±72.7, respectively (P=0.019).

Students in the negative, no and positive impact groups had significantly different preference scores, –51.04±60.4, –6.7±64.4 and 39.8±61.5, respectively (P<0.001).

Forty-one out of 49 students (83.7%) who thought online CBEs had a negative impact on their exam performance preferred classroom exams in the future, regardless of gender, satisfaction or dissatisfaction with the online platform or degree of test anxiety. The main reasons for this result were that 58.5% (24/41) of the students felt that they had not fully adapted to the form of online examinations and 41.5% (17/41) were worried about the unfairness of online examinations and would prefer online OBEs.

Thirteen out of 18 students (72.2%) who thought the online examination had a positive impact on their exam performance preferred online examinations in the future, regardless of gender, satisfaction or dissatisfaction with the online platform or degree of test anxiety. Among the 53 students who thought the online examination had no impact on their exam performance, 25 (47.2%) preferred online examinations and 28 (52.8%) preferred classroom exams.

Discussion

Testing has been found to produce better retention than no testing (6,7). Students’ competence and course credit scores can also be confirmed by a standardized test or set of tests (8). Unfortunately, medical education is currently undergoing a significant change. The transformation of medical education during the pandemic is difficult but essential (8). Questions were raised regarding how examinations would take place during this pandemic (9). Currently, examinations have transitioned to online settings worldwide (10). While there is an increasing requirement for remote-access OBEs, the fairness and reliability of this assessment format as a comparable educational performance measure are of concern (5). There are also concerns about how medical students’ mental health would fare after months of online content and revision (11).

Under these circumstances, the third-year medical students’ pediatric final examination went ahead as planned and was completed as a CBE as usual but from home via an online platform. The aim of our study was to identify the impact of the online CBE and to document and analyze the effects of the current changes, in order to help educators learn and apply new principles and practices in the future (10).

In medical education, especially high-stakes testing, there is an emphasis on MCQs that test the application of knowledge rather than rote memorization (12). Over the past 10 years, the end-of-term pediatric CBEs for third-year medical students at Shanghai Medical College of Fudan University have remained stable, including MCQs and essay questions that assess students’ comprehensive abilities, such as case analysis and clinical thinking. Thus, this exam can be accepted as a comparable exam performance measure in a pediatric curriculum. The mean scores in 2020 were comparable to those in 2019, indicating that students’ exam performance was not significantly affected by the change from classroom to online examination.

According to medical students, the successful delivery of online examinations is dependent on satisfactory internet connections and the availability of IT support during exams (5). To minimize technical issue-related stress and anxiety during the formal final examination, we organized an online simulation test two days before the formal test. This simulation test may have contributed to 59.2% (71/120) of the students perceiving a positive or no impact of the online CBE on their exam performance.

It is an unusual feeling to take an important final examination from home. It is common for students to experience stress or anxiety about assessment, which might be counterproductive to learning and could affect mental health (13). It has been suggested that multiple tests should be integrated into teaching activities (14). During our 16-week curriculum of pediatric courses for third-year undergraduate medical students, online self-assessed OBE was applied once a week to assist knowledge retention, familiarize students with the online teaching platform, and help them become accustomed to online examinations. Nevertheless, in the present study, the majority of students (97.5%) felt various degrees of anxiety due to the stress of the online CBE. With respect to the mean anxiety score, no significant difference was identified between genders (P=0.187). However, female students tended to feel a more negative impact of the online CBE on their exam performance (P=0.003) and were more willing than male students to take classroom rather than online examinations (P=0.019). The major contributing factors for students to feel a negative impact of online CBE on exam performance included poor adaptation to the online exam platform (58.5%) and concerns about the unfairness of the online examinations (41.5%). It is not unusual for female students to record higher rates of anxiety than male students (15).

Despite female students’ self-reported negative impact of CBE on exam performance, their actual exam performance did not differ significantly from that of male students. However, special attention should be paid to female students’ difficulty adapting to the online examination. Encouraging individual-needs-based multiple simulation tests prior to formal exams might be an option. Moreover, sufficient instruction about the exam platform and assurance of fairness during the online examination are beneficial to alleviate the negative impact of online examinations on exam performance and test anxiety.

Limitations

The findings of this study should be interpreted in light of several limitations. First, all the participants were third-year medical students from Fudan University in Shanghai; therefore, they may not be representative of medical students nationally. Future research should include other university populations of medical students. Second, although there was a high response rate in this study, we were unable to determine the characteristics of students who declined to participate, which could result in selection bias. Third, the self-report survey may lead to response bias, and its reliability and validity were not tested. Finally, although as of June 10, 2020, the COVID-19 pandemic was under control in China with only 10 new confirmed cases reported within 24 hours (16), the impact of this pandemic on test anxiety and poor online test performance should be taken into account. Follow-up studies are required to obtain more comprehensive and objective results by using a valid scale for test anxiety.

Conclusions

Test anxiety was common in students who lacked experience with online CBE. Although the online examination did not ultimately impact medical students’ final exam scores, special attention should be paid to female students who may have difficulty adapting to the online examination. Moreover, sufficient instruction about the exam platform, individual-needs-based simulation tests prior to formal exams, and assurance of fairness during the online examination are beneficial to alleviate the negative impact of online examinations on exam performance and test anxiety. Future studies are warranted to confirm these findings.

Acknowledgments

We would like to thank all the medical students who participated in this study and Shanghai Medical College of Fudan University for their cooperation and kind help.

Funding: This study was supported by a grant from the Fudan University Shanghai Medical College 2020 humanities medicine and curriculum ideological and political project.

Footnote

Reporting Checklist: The authors have completed the SURGE reporting checklist. Available at http://dx.doi.org/10.21037/pm-20-80

Data Sharing Statement: Available at http://dx.doi.org/10.21037/pm-20-80

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/pm-20-80). Dr. WZ serves as an unpaid Executive Editor-in-Chief of Pediatric Medicine. The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013) and was approved by the Research Ethics Committee of the Children’s Hospital of Fudan University (NO.:2020-300). Informed consent was obtained from all the participants.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. WHO. WHO Director-General's opening remarks at the media briefing on COVID-19 - 11 March 2020. Available online: https://www.who.int/director-general/speeches/detail/who-director-general-s-opening-remarks-at-the-media-briefing-on-covid-19---11-march-2020

2. Miller DG, Pierson L, Doernberg S. The Role of Medical Students During the COVID-19 Pandemic. Ann Intern Med 2020;173:145-6.

3. Daniel SJ. Education and the COVID-19 pandemic. Prospects (Paris) 2020. [Epub ahead of print].

4. Durning SJ, Dong T, Ratcliffe T, et al. Comparing open-book and closed-book examinations: a systematic review. Acad Med 2016;91:583-99.

5. Pace EJ, Khera GK. The equity of remote-access open-book examinations during Covid-19 and beyond: Medical students' perspective. Med Teach 2020;42:1314.

6. Larsen DP, Butler AC, Roediger HL 3rd. Test‐enhanced learning in medical education. Med Educ 2008;42:959-66.

7. Roediger HL 3rd, Karpicke JD. The Power of Testing Memory: Basic Research and Implications for Educational Practice. Perspect Psychol Sci 2006;1:181-210.

8. Emanuel EJ. The Inevitable Reimagining of Medical Education. JAMA 2020;323:1127-8.

9. Jamil Z, Fatima SS, Saeed AA. Preclinical medical students' perspective on technology enhanced assessment for learning. J Pak Med Assoc 2018;68:898-903.

10. Rose S. Medical Student Education in the Time of COVID-19. JAMA 2020;323:2131-2.

11. Mahase E. Covid-19: Mental health consequences of pandemic need urgent research, paper advises. BMJ 2020;369:m1515.

12. Case SM, Swanson DB. Constructing Written Test Questions for the Basic and Clinical Sciences. 3rd ed. Philadelphia, PA: National Board of Medical Examiners, 2002.

13. McLachlan JC. The relationship between assessment and learning. Med Educ 2006;40:716-7.

14. Wood T. Assessment not only drives learning, it may also help learning. Med Educ 2009;43:5-6.

15. Thiemann P, Brimicombe J, Benson J, et al. When investigating depression and anxiety in undergraduate medical students timing of assessment is an important factor - a multicentre cross-sectional study. BMC Med Educ 2020;20:125.

16. National Health Commission of the People’s Republic of China. The latest situation of COVID-19 pandemic as of 24:00 on June 10. 2020. Available online: http://www.nhc.gov.cn/xcs/yqtb/202006/d89974801e894128b26f6ecc85481334.shtml

Cite this article as: Wu J, Zhang R, Qiu W, Shen J, Ma J, Chai Y, Wu H, Hu L, Zhou W. Impact of online closed-book examinations on medical students’ pediatric exam performance and test anxiety during the coronavirus disease 2019 pandemic. Pediatr Med 2021;4:1.