Utilization of artificial intelligence in echocardiographic imaging for congenital heart disease: a narrative review

Introduction

Background

Congenital heart disease (CHD) refers to congenital anomalies of the heart and great vessels that occur during embryonic development, with a prevalence of 6 to 12 cases per 1,000 live births (1). CHD is the most common birth defect among neonates and is one of the leading causes of death in newborns and children, with an estimated mortality rate of 8 per 1,000 live births (2,3). Various types of CHD include ventricular septal defect (VSD), atrial septal defect (ASD), patent ductus arteriosus (PDA), pulmonary stenosis (PS), tetralogy of Fallot (TOF), transposition of the great arteries (TGA), coarctation of the aorta (CoA), and hypoplastic left heart syndrome (HLHS), etc., which may also present in combination as complex CHD (4,5). Early detection and diagnosis are crucial for improving CHD prognosis and reducing natural mortality rates (6,7). Auxiliary examinations such as echocardiography, cardiac magnetic resonance (CMR), cardiac computed tomography (CT), and cardiovascular angiography provide diagnostic and therapeutic guidance for CHD patients (8,9). As a non-invasive imaging modality, echocardiography offers rapid and direct visualization of cardiac structure and function, as well as hemodynamic information, making it a primary method for screening and diagnosing CHD (9,10). Additionally, echocardiographic results are among the key indications for formulating surgical or interventional treatment plans and evaluating treatment efficacy (11,12). The complex anatomical structures of the heart and the standard echocardiographic view acquisition process necessitate reliance on the expertise and experience of echocardiographers for accurate operation and interpretation. Consequently, the utilization of pediatric echocardiographic imaging technology may be limited in healthcare facilities with fewer resources. 
With the advancement of ultrasound imaging technology, the generation of massive volumes of image data has increased the demand for image processing and analysis capabilities, thereby increasing the clinical workload (13).

Recent rapid developments in artificial intelligence (AI) have introduced new and powerful computational algorithms. The application of AI in medical imaging has progressed rapidly, with numerous high-performing models and algorithms showcasing the substantial potential of AI to strengthen the evaluation and diagnosis of CHD through echocardiography. However, a review of the existing literature highlights several limitations in the current summaries of AI applications in CHD. These limitations include: (I) an emphasis primarily on the prenatal diagnosis of CHD; (II) discussions that address multiple imaging modalities but provide insufficient focus on the specific role of echocardiography; and (III) a lack of attention to critical aspects such as cardiac function measurement and image quality control, both of which are essential for imaging-based CHD diagnosis. Notably, no comprehensive review has yet integrated the diverse applications of AI in echocardiography for both prenatal and postnatal assessments of CHD. This review aims to address these gaps by providing an in-depth evaluation of AI’s multifaceted applications in echocardiography, including image recognition and segmentation, intelligent auxiliary diagnosis, cardiac function measurement, image quality control, and multimodal AI integration.

Overview of AI

AI describes computer programs that can perform tasks requiring human-like intelligence, including pattern recognition, language comprehension, and problem-solving (14). In the medical field, AI can autonomously make decisions based on data such as patient history, laboratory results, and medical imaging. AI is particularly beneficial in medical imaging, where its ability to process vast amounts of data and detect subtle patterns can assist in diagnosis and treatment planning.

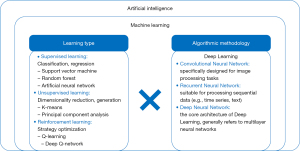

Machine learning (ML), a critical branch of AI, enables systems to learn from data and create automated clinical decision support systems without being explicitly programmed (14,15) (Figure 1). ML is categorized into three learning types: supervised learning, unsupervised learning, and reinforcement learning (16) (Figure 1). In supervised learning, algorithms use labeled datasets to predict outcomes, making it ideal for tasks like regression and classification (15,17,18). Key algorithms in supervised learning include artificial neural networks (ANN), support vector machines (SVM), and decision trees (15). ANN and SVM are widely used due to their ability to handle complex, high-dimensional data with high accuracy. Inspired by the organization of the human brain, ANN models consist of nodes called neurons that are arranged in a network and configured in multiple layers (depth); the number of neurons can range from hundreds to millions (19). Unsupervised learning identifies hidden patterns in unlabeled data through clustering and association rule learning (15,17,20). Reinforcement learning combines aspects of both supervised and unsupervised learning, optimizing models through trial and error to maximize performance (15). Deep learning (DL), a branch of ML, utilizes multi-layered neural networks, such as recurrent neural networks (RNN), convolutional neural networks (CNN), and deep neural networks (DNN), to simulate brain function and make predictions based on complex data (13,15,16,21) (Figure 1).

Cardiovascular imaging is critical for the diagnosis and treatment of cardiovascular diseases. Traditional analysis of medical images often relies heavily on the expertise of clinicians, which can lead to misdiagnoses, particularly in settings with limited resources. Even in well-equipped settings, clinicians may encounter difficulties in accurately interpreting large volumes of imaging data. AI in cardiovascular medicine has the potential to address these challenges by analyzing imaging data, detecting subtle abnormalities, performing image segmentation, and automating interpretation (16,22,23). Furthermore, AI can help identify new disease phenotypes, improving diagnostic accuracy. By automating routine tasks, AI alleviates the clinician’s workload, allowing for faster and more accurate diagnoses, ultimately improving patient care and safety. We present this article in accordance with the Narrative Review reporting checklist (available at https://pm.amegroups.com/article/view/10.21037/pm-24-72/rc).

Methods

We searched PubMed, China National Knowledge Infrastructure, and Wanfang using key search words including artificial intelligence, machine learning, deep learning, convolutional neural networks, echocardiography, echocardiogram, fetal echocardiography, fetal ultrasound, transthoracic echocardiography and congenital heart disease (Figure 2). All peer-reviewed articles, including original articles, case reports, meta-analyses, reviews and systematic reviews, published in both Chinese and English, were analyzed. Studies describing the use of AI in echocardiographic images of CHD were selected. Some of these articles cited references that were relevant to our study but did not appear in the original search; these references were also included in the analysis. These articles compiled a substantial body of literature relevant to the understanding of AI in ultrasonic diagnosis of CHD, including its advantages and limitations, which were used to construct this narrative review. Studies unrelated to the search terms were excluded. A total of 108 articles were selected for inclusion (Table 1).

Table 1

| Items | Specification |
|---|---|
| Date of search | January 1, 2024 to September 1, 2024 |
| Resources searched | PubMed, China National Knowledge Infrastructure, Wanfang |
| Keywords used for search | Artificial intelligence, machine learning, deep learning, convolutional neural networks, echocardiography, echocardiogram, fetal echocardiography, fetal ultrasound, transthoracic echocardiography, and congenital heart disease |
| Timeframe | Literature published until end of search date |
| Inclusion and exclusion criteria | Inclusion: original articles, case reports, meta-analyses, reviews and systematic reviews pertaining to the application of artificial intelligence in echocardiography, and relevant citations in the chosen references; language restrictions: English, Chinese. Exclusion: articles related to obstetric ultrasound; articles not pertaining to artificial intelligence, echocardiography, fetal echocardiography, or congenital heart disease |
| Selection process | The first authors reviewed all retrieved articles independently. All authors participated in the final selection of literature suitable for the review |

Discussion

Application of AI in echocardiography

CHD is the leading cause of congenital malformation-related mortality in infants and young children (24). Early screening, primarily via fetal echocardiography, can reduce mortality and alleviate associated burdens (25-28). Transthoracic echocardiography (TTE) is used for cardiac assessment in CHD patients, with two-dimensional (2D) echocardiography providing cross-sectional views and three-dimensional (3D) echocardiography offering spatial visualization of complex abnormalities (29-33).

High-quality imaging is challenging due to fetal movement, small cardiac structures, and imaging depth, and is affected by artifacts (34,35). AI technology may assist in improving diagnostic accuracy and overcoming these challenges (36,37).

Image recognition and segmentation

Fetal echocardiography

Bridge et al. (38) proposed a framework for tracking the key variables that describe the content of each frame of freehand 2D ultrasound scanning videos of the healthy fetal heart. Focusing on three specific views, namely the apical four-chamber (A4C) view, the left ventricular outflow tract (LVOT) view, and the three-vessel view (3VV), the study employed random forests to predict the visibility, location, and orientation of the fetal heart in the image, as well as the viewing plane label from each frame. Additionally, a particle filtering framework was incorporated to reduce the error rate in feature classification. This work represents an important initial step towards developing tools for detecting CHD in abnormal cases. Huang et al. (39) conducted a similar study, but their approach utilized a CNN system, focusing on spatio-temporal acoustic patterns in ultrasound videos. This system is capable of processing ultrasound video data to describe the fetal heart and to identify standard viewing planes in one step. Yu et al. (40) employed a dynamic CNN based on multi-scale information and fine-tuning to segment the left ventricle (LV) from fetal ultrasound images, using the mitral valve as a base point to separate the left atrium (LA) from the LV. The dynamic fine-tuning approach enabled the CNN model to adapt to variations in objects. This model’s segmentation capabilities hold potential for quantitative analysis of fetal LV function. However, the model only focused on a small region around the LV in long-axis fetal echocardiography sequences.

Clinicians, in practice, require quantitative analysis of fetal cardiac function by integrating the LA, LV, right atrium (RA), right ventricle (RV), epicardium, descending aorta (DAO), and thorax. Xu et al. (41) addressed this limitation by proposing an end-to-end DW-Net capable of accurately and automatically segmenting these seven key anatomical structures in the A4C view. Despite challenges such as artifacts, category confusion, and missing boundaries in ultrasound images, DW-Net effectively resolved critical segmentation issues and demonstrated improved robustness to variations in image quality, achieving an average accuracy of 80.875% for potential structures, indicating its potential for broad clinical applicability. Dozen et al. (42) introduced a novel segmentation method termed Cropping-Segmentation-Calibration (CSC) for segmenting the interventricular septum (IVS) in fetal cardiac ultrasound videos of the A4C view. This approach leverages cropped image information around the IVS and the time-series information from videos to calibrate the output of U-Net—a combination of convolution, deconvolution, and skip-connection, frequently used in medical image segmentation (43). Qiao et al. (44) proposed an Intelligent Feature Learning Detection System (FLDS) for the fetal A4C view to detect the four chambers (LV, LA, RV, and RA). A multistage residual hybrid attention module (MRHAM) was incorporated into the FLDS to learn robust and strong features of the four chambers from the images. FLDS can complete detection tasks in 2 seconds, significantly faster than experienced cardiologists (2 minutes), and its accuracy surpasses that of mid-level cardiologists (91% vs. 87%). FLDS can be integrated with echocardiography instruments, using the fetal A4C view as input and providing inference results that are then transmitted to the clinician’s computer, displaying the positions of the fetal four chambers. 
This system holds significant potential for clinical diagnosis, offering valuable assistance and reference to clinicians. Lu et al. (45) proposed a YOLOX-based deep instance segmentation neural network, termed IS-YOLOX, marking the first study on instance segmentation on 13 types of anatomical structures in the fetal A4C view. These structures include the LV, RV, LA, RA, interatrial septum (IAS), IVS, rib, DAO, spine, left ventricular wall (LVW), right ventricular wall (RVW), left lung, and right lung.

Although the A4C view is crucial for the diagnosis of CHD, a more accurate diagnosis requires the integration of multiple views for comprehensive analysis (46,47). Stoean et al. (48) advanced the field by expanding the number of views in automated image recognition based on fetal echocardiography to four distinct planes: A4C, 3VV, LVOT, and right ventricular outflow tract (RVOT). They applied CNN to color Doppler fetal echocardiographic images, distinguishing four critical structures across these planes: the atrioventricular blood flow in the A4C view, the aorta in the LVOT view, the large vessel crossing in the RVOT view, and the arch in the 3VV, achieving an accuracy rate of 95.5%. Notably, whereas guidelines recommend fetal echocardiography during mid-gestation (18–22 weeks), the ultrasound images used for training and testing in Stoean et al.’s study were from early gestation (12–14 weeks). The promising performance of their DL framework could potentially enhance fetal CHD screening and diagnostic efforts during early gestation. Wu et al. (49) proposed a fetal heart standard view recognition model based on the YOLOv5 deep convolutional neural network (U-Y-net), which identifies five fetal cardiac planes, namely A4C, 3VV, LVOT, RVOT, and the three-vessel trachea (3VT) view, during the gestational period of 20–24 weeks, with an accuracy rate of 94.3%. The precision and sensitivity of U-Y-net surpass those of entry-level practitioners and are comparable to those of mid-level practitioners. U-Y-net assists clinicians by providing standard view profiles, identifying key anatomical structures, and screening for potential CHD.

These studies demonstrate the efficacy of AI in the image recognition and segmentation of fetal echocardiography, showcasing superior performance that benefits both novice practitioners and experienced clinicians by providing valuable reference points.

TTE

Madani et al. (50) proposed a supervised CNN model for the automatic classification of 15 standard echocardiographic views from videos and static images, without the need for prior manual feature selection. The views comprised 12 from B-mode (videos and still images) and 3 from pulse-wave Doppler, continuous-wave Doppler, and M-mode (still images) recordings. The model achieved an average overall test accuracy of 97.8% on videos, surpassing that of board-certified echocardiographers. A notable aspect of this model is that the image data used for training, validation, and testing were randomly sampled from clinical practice, covering patients of varying ages, body types, and hemodynamic conditions (such as heart failure, valvular heart disease, aortic aneurysms, CHD, and cardiac arrest), as well as different ultrasound instruments (e.g., General Electric, Philips, Siemens). The data therefore reflect natural variations in the view acquisition process, such as differences in zoom, depth, focus, sector width, and gain. Because it was trained with real-world clinical data, the model is more broadly applicable to clinical practice. Furthermore, this study employed occlusion experiments and saliency mapping to confirm that the proposed CNN model’s automated recognition of echocardiographic views is based on cardiac structures within the images, which mirrors the approach of a human doctor. This addresses the “black box” issue of AI models and may enhance clinicians’ trust in AI-assisted methods. However, CNN models trained on images from general cardiology patients may not perform as well in classifying images related to CHD.

Wu et al. (51) proposed a novel DL-based CNN method that automatically and efficiently identifies 23 distinct standard echocardiographic views. These views encompass not only the commonly used diagnostic views for pediatric CHD but also include M-mode views for assessing chamber sizes and LV systolic function, as well as pulse Doppler views for measuring flow velocities across valves and vessels and determining the presence of stenosis. This work is notable for being the first to cover the most frequently used diagnostic views for CHD, utilizing the largest real-world historical examination database, which includes cases of normal hearts, CHD, and postoperative CHD pediatric patients, thereby more closely approximating conventional clinical CHD data environments. Additionally, this study introduced a “knowledge distillation” method, enabling simpler models with fewer parameters to achieve recognition performance comparable to more complex, multi-parameter models. This approach maximizes accuracy and precision while limiting network complexity, thereby improving operational efficiency.
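The idea behind knowledge distillation can be illustrated with a minimal sketch. It assumes the widely used formulation in which a small "student" network is trained to match the temperature-softened output probabilities of a larger "teacher" network; whether Wu et al. used exactly this loss is not stated here, and the logits, the three view classes, and the function names are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities, softened by the temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the softened teacher distribution to the student's.

    The temperature**2 factor is the usual scaling that keeps the loss
    magnitude comparable when the temperature is changed.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student))
    return temperature ** 2 * kl


# Hypothetical logits for three echocardiographic view classes.
teacher = [4.0, 1.0, 0.5]
good_student = [3.5, 1.2, 0.4]   # roughly agrees with the teacher
poor_student = [0.5, 1.0, 4.0]   # prefers a different view

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

A student whose outputs resemble the teacher's receives a small loss, so minimizing this quantity during training pushes the compact model toward the behavior of the larger one.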

HLHS is a severe form of CHD in which the RV and tricuspid valve (TV) alone support circulation. Without timely surgical intervention, HLHS can rapidly progress to left heart failure and death (52). Herz et al. (53) employed a fully convolutional network to segment the TV from transthoracic 3D echocardiography. The average accuracy of TV segmentation approached the limit of human eye resolution, enabling rapid modeling and quantification that provides valuable information for surgical planning in HLHS patients.

These studies demonstrate that AI methods have the potential to enhance the accuracy and efficiency of echocardiographic diagnosis, streamline the workflow of echocardiographic physicians, and lay the groundwork for high-throughput analysis of echocardiographic data.

Intelligent auxiliary diagnosis

Fetal echocardiography

Four-chamber view images provide the highest accuracy in recognizing fetal heart defects and display the most complete cardiac structures compared to other views (54). Gong et al. (55) combined a generative adversarial network (GAN) with transfer learning to propose a novel model for detecting fetal CHD, named DANomaly Generative Adversarial Convolution Neural Network (DGACNN). This model captures end-systolic A4C images of the fetal heart to distinguish between prenatal CHD and normal heart, achieving a recognition rate of 85%. Compared to cardiologists, the DGACNN model’s average accuracy was approximately 4% to 8% higher than that of doctors with junior and intermediate titles, but 2.3% lower than that of doctors with senior titles. The average accuracy of DGACNN exceeded that of doctors across all three titles taken together (84% vs. 81%). Arnaout et al. (56) trained a CNN model to identify five recommended fetal cardiac views (3VT, 3VV, LVOT, A4C, and abdominal view) and enabled the model to distinguish between normal and abnormal hearts. In the internal test set, the model achieved an area under the curve (AUC) of 0.99 for differentiating normal from abnormal hearts, with a sensitivity of 95% and specificity of 96%. The model’s sensitivity was comparable to that of clinicians (88% vs. 86%, P=0.3), while its specificity significantly exceeded that of clinicians (90% vs. 68%, P=0.04). The model maintained robust predictive performance when applied to external datasets and low-quality images.
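For readers less familiar with the metrics reported in these studies, the following sketch shows how sensitivity, specificity, accuracy, and AUC are commonly computed for a binary normal-versus-CHD classifier. The labels, scores, and the 0.5 decision threshold are hypothetical illustrations and are not data from any cited study; the AUC is computed with the rank-based (Mann-Whitney) definition.

```python
def diagnostic_metrics(y_true, y_pred):
    """Sensitivity, specificity and accuracy for binary labels (1 = CHD)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "sensitivity": tp / (tp + fn),   # share of CHD cases detected
        "specificity": tn / (tn + fp),   # share of normal hearts cleared
        "accuracy": (tp + tn) / len(pairs),
    }


def auc(y_true, scores):
    """Probability that a random CHD case scores above a random normal case."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical model outputs for ten fetal examinations.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.4, 0.2, 0.2, 0.1, 0.05]
predictions = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold

print(diagnostic_metrics(labels, predictions))
print(auc(labels, scores))
```

Sensitivity and specificity depend on the chosen threshold, whereas AUC summarizes discrimination across all thresholds, which is why studies often report both.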

Yeo et al. (57) developed a new method utilizing “intelligent navigation” technology, known as Fetal Intelligent Navigation Echocardiography (FINE). This method labels seven anatomical structures of the fetal heart and then uses “intelligent navigation” to query spatiotemporal image correlation (STIC) volume datasets to automatically generate nine standard views. The FINE method demonstrated the ability to identify a wide range of CHDs with 98% sensitivity (58). Subsequently, Veronese et al. (59) conducted a prospective study using FINE technology to successfully generate nine standard fetal echocardiographic views in the second and third trimesters of pregnancy, confirming that this technology can serve as a screening method for CHD. Since its invention, FINE has been integrated into commercial ultrasound platforms and is now referred to as “5D Heart” technology. The 5D Heart technology significantly enhances the image quality of key diagnostic elements in fetal echocardiography, reduces operator dependency, and streamlines the fetal cardiac examination process, enabling even less experienced sonographers to quickly master and apply it (60,61). Other researchers have applied FINE to the screening of fetal cardiac abnormalities and found it to have excellent diagnostic efficacy for typical CHD such as TOF (62,63) and TGA (64,65). In clinical practice, FINE has been successfully used to diagnose cases of dextrocardia with complex CHD (66), TOF with pulmonary atresia (67), and atrioventricular septal defect (68).

Duct-dependent CHD (DDCHD) is a severe form of CHD that relies on the patency of the ductus arteriosus to maintain adequate circulation. It includes conditions such as TGA, interrupted aortic arch, and CoA, which are often detected at low rates, particularly in underdeveloped countries and regions (69-71). Tang et al. (72) developed and tested a two-stage deep transfer learning model named DDCHD-DenseNet, designed to screen for critical DDCHD using the aortic arch view. The model achieved sensitivities ranging from 0.759 to 0.973 and specificities ranging from 0.759 to 0.985 across different test sets. It shows promise as an automated screening tool for stratified care and computer-aided diagnosis and has the potential to be extended to the screening of other fetal cardiac developmental defects. CoA is the most frequently missed diagnosis among fetal CHD, with fewer than one-third of cases detected during prenatal screening (73,74). Taksøe-Vester et al. (75) developed an AI model capable of recognizing standard cardiac planes and performing automatic cardiac biometrics. During fetal echocardiographic scans at 18–22 weeks, the AI model automatically identified the A4C and 3VV views. From these views, it automatically measured the diameters of the atrioventricular valve, the sizes of the LA, LV, RA, and RV (including area, length, and diameter), and the diameters of the DAO, ascending aorta (AAO), and the main pulmonary artery (MPA) at the end of diastole. The model also calculated the RV/LV area ratio and the MPA/AAO diameter ratio, using these cardiac parameters to predict the risk of CoA. This time-efficient, objective, and standardized approach reduces observer variability compared to manual measurements, improving the accuracy of CoA detection and potentially enhancing outcomes for infants with CoA through early intervention and treatment.
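The two ratios described above are simple quotients of automated measurements. The sketch below only illustrates that arithmetic; the function name, units, and example values are hypothetical, and no risk threshold is applied because none is given in this review.

```python
def coa_ratio_features(rv_area_mm2, lv_area_mm2, mpa_diameter_mm, aao_diameter_mm):
    """Return the RV/LV area ratio and the MPA/AAO diameter ratio.

    All inputs are assumed to be end-diastolic measurements taken from the
    A4C and 3VV views; every value must be positive.
    """
    values = (rv_area_mm2, lv_area_mm2, mpa_diameter_mm, aao_diameter_mm)
    if any(v <= 0 for v in values):
        raise ValueError("measurements must be positive")
    return {
        "rv_lv_area_ratio": rv_area_mm2 / lv_area_mm2,
        "mpa_aao_diameter_ratio": mpa_diameter_mm / aao_diameter_mm,
    }


# Hypothetical measurements for one mid-gestation scan.
features = coa_ratio_features(
    rv_area_mm2=120.0, lv_area_mm2=80.0,
    mpa_diameter_mm=4.5, aao_diameter_mm=3.0,
)
print(features)
```

Using ratios rather than absolute sizes makes the features less sensitive to gestational age and fetal size, which is one plausible reason such indices are used as inputs to a risk model.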

TTE

Currently, five standard echocardiographic views are commonly used for pediatric echocardiography: the parasternal long-axis (PSLAX) view, parasternal short-axis (PSSAX) view, A4C view [or apical five-chamber (A5C) view], subxiphoid four-chamber view, and suprasternal long-axis (SSLAX) view. Wang et al. (76) proposed an end-to-end framework for the automatic analysis of multi-view echocardiograms to identify five standard echocardiographic views: PSLAX, PSSAX, A4C, subxiphoid long-axis (SXLAX) view, and SSLAX. The model achieved a diagnostic accuracy of 95.4% for CHD and 92.3% for VSD or ASD, indicating substantial potential for clinical practice. Jiang et al. (77) proposed replacing the traditional five views with seven standard views for pediatric echocardiography and validated its feasibility using a DL model. These seven views include the PSLAX view, PSSAX view, parasternal four-chamber view, parasternal five-chamber view, subxiphoid four-chamber view, subxiphoid bi-atrial view, and SSLAX view. This improved view selection method offers a more stable reflection of anatomic abnormalities associated with aortic-related CHD, such as CoA, and reveals anatomical details around the atrial septum more precisely. The DL model based on these seven standard views effectively detects pediatric CHD and demonstrates considerable robustness and practical value. VSD, ASD, and PDA are the most common CHDs in children. Tan et al. (78) incorporated Bayesian inference and dynamic neural feedback into the AI-assisted CHD diagnostic model CHDNet (Congenital Heart Disease Network). The improved feedback cell significantly enhanced the architectures’ reliability, robustness, and accuracy in distinguishing normal hearts from the three common CHDs.

AI-assisted diagnostic models developed by Borkar et al. (79) and Li et al. (80) also demonstrated excellent diagnostic performance, with an accuracy of 97.9% for ASD. Beyond binary classification, researchers have developed automated models for the localization and quantification of cardiac defects. Lin et al. (81) investigated a DL model capable of automatically processing color Doppler echocardiograms, selecting views, detecting ASD, and identifying the endpoints of the atrial septum and of the defect to quantify the size of the defect and the residual rim. Wang et al. (82) proposed a novel deep keypoint stadiometry (DKS) model for precise localization of defect endpoints and measurement of absolute distances, providing effective guidance for ASD intervention planning. Diller et al. (83) developed a new DL algorithm based on TTE, constructing convolutional networks from A4C and PSSAX views to detect potential TGA diagnoses, including TGA after atrial switch procedure or congenitally corrected TGA (ccTGA). This algorithm demonstrated an overall diagnostic accuracy of 98.0% with high performance in segmenting the systemic RV or LV, showing practical utility in complex CHD diagnostics.

PDA is a common vascular defect in preterm infants. Lei et al. (84) utilized CNN to automatically detect PDA on echocardiography, achieving an AUC of 0.88, with a sensitivity of 0.76 and specificity of 0.87. The model processes data rapidly and has the potential to be deployed on low-power devices within clinical workflows, assisting clinicians in diagnosis. Erno et al. (85) designed a novel DL model named Ultrasound Video Network (USVN) for assisting in PDA diagnosis using relevant echocardiographic images, achieving an AUC of 0.86, sensitivity of 0.80, and specificity of 0.77. This model can also be integrated into automated detection software to streamline PDA imaging workflows. This classifier represents an initial step toward semi-automated, point-of-care testing of preterm infants with PDA.

Given the complexity of echocardiographic imaging data, which requires significant expertise from operators and clinicians, AI’s powerful computational capabilities facilitate the processing and learning from these complex datasets. Developing automated diagnostic methods with AI can provide accurate diagnoses within limited time frames, enhancing efficiency and enabling early therapeutic interventions for CHD patients. Additionally, AI-assisted diagnostic methods achieving expert-level accuracy can help bridge the skill gaps caused by disparities in medical resources in remote areas, improving the accessibility of pediatric echocardiography and benefiting a broader population of CHD patients.

Cardiac function measurement

Echocardiography provides detailed information on cardiac structures, function and hemodynamic parameters. Abnormalities can lead to hemodynamic changes, affecting systolic and diastolic functions, and causing complications such as arrhythmias (86), heart failure (87), and pulmonary hypertension (88). Assessing cardiac function is crucial for evaluating the severity of CHD in children and guiding clinical treatment. While CMR is the “gold standard” for non-invasive assessment (89), its high cost and long duration make echocardiography the preferred method (90,91). AI’s ability to extract features can enable accurate identification of cardiac structures and function. Ouyang et al. (92) proposed EchoNet-Dynamic, a video-based DL algorithm that performs left ventricular segmentation and predicts ejection fraction (EF), with prediction variation comparable to human experts and significantly faster than manual assessment. This algorithm shows strong generalizability across datasets. Asch et al. (93) introduced an automated ML algorithm to quantify left ventricular ejection fraction (LVEF) without requiring endocardial boundary identification. Lau et al. (94) developed Dimensional Reconstruction of Imaging Data (DROID), a DL model that interprets TTE images and measures cardiac structure and function, including LVEF, left ventricular end-diastolic dimension (LVEDD), left ventricular end-systolic dimension (LVESD), interventricular septal wall thickness, posterior wall thickness (PWT), and left atrial anteroposterior dimension (LAAP), and links these measurements to critical cardiovascular outcomes, such as heart failure and myocardial infarction.

Zhang et al. (95) established a CNN-based, fully automated, and scalable analysis pipeline for echocardiogram interpretation, achieving recognition of 23 views and segmentation of five common views [PSLAX, PSSAX, apical two-chamber (A2C), apical three-chamber (A3C), and A4C]. The segmented output can be used for structural quantification of ventricular volumes and left ventricular mass, as well as the automatic determination of LVEF and longitudinal strain. The models can also diagnose hypertrophic cardiomyopathy, cardiac amyloidosis, and pulmonary hypertension. This approach integrates view recognition, view segmentation, structural and functional quantification, and disease prediction, with potential applicability to CHD. Yu et al. (96) proposed an LV volume prediction method based on a backpropagation (BP) neural network, which learns and stores the complex mapping relations between 2D LV boundaries and 3D LV volumes. This allows the BP neural network to represent more complex geometrical shapes, providing a closer approximation to real LV volumes and demonstrating superior performance in fetal LV volume measurements. Accurate LV volume measurement enables precise calculation of LV function parameters, such as stroke volume and EF.
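Once end-diastolic and end-systolic LV volumes are available, stroke volume and EF follow from their standard definitions: stroke volume is the difference between the two volumes, and EF is that difference expressed as a percentage of the end-diastolic volume. The sketch below shows the calculation; the function names and the example fetal volumes are hypothetical.

```python
def stroke_volume(edv_ml, esv_ml):
    """Stroke volume (mL): end-diastolic volume minus end-systolic volume."""
    if edv_ml <= 0 or esv_ml < 0 or esv_ml > edv_ml:
        raise ValueError("expected 0 <= ESV <= EDV and EDV > 0")
    return edv_ml - esv_ml


def ejection_fraction(edv_ml, esv_ml):
    """Ejection fraction (%): stroke volume as a share of end-diastolic volume."""
    return 100.0 * stroke_volume(edv_ml, esv_ml) / edv_ml


# Hypothetical predicted LV volumes for one fetal heart.
edv, esv = 1.2, 0.5
print(round(stroke_volume(edv, esv), 2), "mL")
print(round(ejection_fraction(edv, esv), 1), "%")
```

Because both parameters are derived directly from the two volumes, any error in the predicted volumes propagates into stroke volume and EF, which is why accurate volume estimation matters.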

Research on the assessment of cardiac diastolic function parameters is relatively limited. Tromp et al. (97) developed a fully automated DL-based echocardiographic workflow for the classification, segmentation, and annotation of 2D video and Doppler modes in echocardiography. This workflow evaluates cardiac function parameters, including ventricular volumes, LVEF, and diastolic function, such as the ratio of mitral inflow E wave to tissue Doppler e' wave (E/e'). The model reliably classifies patients into those with systolic dysfunction (LVEF <40%) and diastolic dysfunction (E/e' ratio ≥13). Compared to manual measurements by expert echocardiographers, automatic measurements exhibit lower variability, indicating that DL algorithms have the potential to replace manual annotations in echocardiography. Further research by other scholars has also confirmed that AI-based fully automated cardiac function measurements are comparable to those performed by human echocardiographers (98-100).
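The classification step described above can be expressed as two threshold rules. The sketch below uses the cut-offs quoted in this paragraph (LVEF <40% and E/e' ≥13); the function names, units, and example velocities are hypothetical, and the code is an illustration of the rules rather than the published workflow.

```python
def e_over_e_prime(e_wave_cm_s, e_prime_cm_s):
    """Ratio of mitral inflow E velocity to tissue Doppler e' velocity."""
    if e_wave_cm_s <= 0 or e_prime_cm_s <= 0:
        raise ValueError("velocities must be positive")
    return e_wave_cm_s / e_prime_cm_s


def classify_function(lvef_percent, e_e_prime_ratio):
    """Apply the thresholds quoted in the text to one patient."""
    return {
        "systolic_dysfunction": lvef_percent < 40.0,
        "diastolic_dysfunction": e_e_prime_ratio >= 13.0,
    }


# Hypothetical automated measurements for one patient.
ratio = e_over_e_prime(e_wave_cm_s=90.0, e_prime_cm_s=6.0)
print(ratio)
print(classify_function(lvef_percent=35.0, e_e_prime_ratio=ratio))
```

Because the rules are fixed, the reliability of the classification depends entirely on how accurately the upstream DL models measure LVEF and E/e'.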

These studies suggest that AI algorithms hold significant clinical value by enhancing the accuracy of cardiac function parameter measurements, simplifying diagnostic workflows, and reducing time and labor costs. While these novel AI methods show promise in improving cardiac function parameter measurements in the general cardiac patient population, their applicability and predictive accuracy in CHD patients remain unclear. Further testing and validation with diverse and standardized datasets are needed to assess their performance in this specific population.

Image quality control

To reduce missed diagnosis or misdiagnosis of CHD, quality assessment of ultrasound images is essential. Abdi et al. (101) developed a deep CNN model for real-time quality assessment of single-systolic frames in the A4C view; the mean absolute error between the scores from the final trained model and the expert’s manual scores was 0.71±0.58. This real-time feedback method has the potential to enhance early diagnosis and timely treatment of CHD. Subsequently, Abdi et al. (102) introduced an RNN model that utilizes the information available in sequential echo images (cine echo) to achieve real-time quality assessment of echo cine loops across five standard imaging planes: A2C, A3C, A4C, parasternal short axis at the aortic valve level (PSAXA), and parasternal short axis at the papillary muscle level (PSAXPM). Compared to the gold-standard score assigned by experienced echosonographers, this DL model achieved an average quality score accuracy of 85%. The real-time quality feedback provided by AI during image acquisition can help less experienced ultrasound technicians obtain higher-quality images and has the potential to be incorporated into the routine echocardiographic workflow in the future (103). This could promote the adoption of echocardiography in low-resource settings and aid the early and timely diagnosis of CHD. Image post-processing is also a critical factor affecting the efficiency and accuracy of disease diagnosis. Diller et al. (104) were the first to evaluate the application of a DL-based autoencoder in patients with CHD, enabling automatic denoising and removal of acoustic shadowing artefacts in A4C images, thereby improving image quality.
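The agreement metric reported for the quality-assessment model, a mean absolute error with its standard deviation, can be computed as in the following sketch. The scores are hypothetical, and the use of the population standard deviation is an assumption, since the text does not state which form the original study used.

```python
from statistics import mean, pstdev


def mae_with_sd(model_scores, expert_scores):
    """Return (mean absolute error, SD of the absolute errors)."""
    if len(model_scores) != len(expert_scores) or not model_scores:
        raise ValueError("score lists must be non-empty and of equal length")
    abs_err = [abs(m - e) for m, e in zip(model_scores, expert_scores)]
    # Assumption: population SD; a study might report the sample SD instead.
    return mean(abs_err), pstdev(abs_err)


# Hypothetical quality scores, chosen only for illustration.
model = [3.0, 4.5, 2.0, 5.0]
expert = [3.5, 4.0, 3.0, 5.0]
mae, sd = mae_with_sd(model, expert)
print(f"MAE = {mae:.2f} ± {sd:.2f}")
```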

Multimodal AI integration

Multimodal imaging refers to the integration of various imaging techniques to comprehensively assess a patient’s condition and obtain more detailed structural and functional information (105). In the screening and diagnosis of CHD, multimodal imaging offers significant advantages by overcoming the limitations of single imaging methods and providing a comprehensive and accurate basis for diagnosis. Common multimodal imaging combinations include echocardiography-magnetic resonance imaging (MRI), echocardiography-CT, and echocardiography-angiography, which together provide a comprehensive evaluation of cardiac anatomy and function, allow precise assessment of hemodynamics, and assist clinicians in making more accurate diagnostic and therapeutic decisions (106). Swanson et al. (107) have developed a multimodal AI method combining Doppler echocardiography and computerized tomography angiography (CTA) for the specific hemodynamic study of patients with CoA. This method utilizes aortic shape data from CTA and velocity data from Doppler transthoracic echocardiography to derive velocity and pressure data, providing supplementary diagnostic insights for more rigorous CoA evaluation. This approach is particularly applicable for clinical use in resource-limited settings. Multimodal imaging has proven to be an invaluable tool in the diagnosis and management of CHD, offering enhanced diagnostic accuracy and clinical applicability.

Trustworthiness, limitations, challenges and future directions

The studies reviewed demonstrate significant advancements in AI applications for echocardiography in CHD, showcasing potential in image segmentation, diagnostic support, cardiac function measurement, image quality control and multimodal fusion. However, the trustworthiness of these studies varies. For instance, studies utilizing larger datasets and external validation frameworks [e.g., Madani et al., 2018 (50); Arnaout et al., 2021 (56); Tan et al., 2023 (78); Ouyang et al., 2020 (92); Lau et al., 2023 (94); Tromp et al., 2022 (97)] provide more robust evidence compared to those relying on small, single-center datasets or lacking independent validation [e.g., Yu et al., 2017 (40); Herz et al., 2021 (53); Borkar et al., 2018 (79); Yu et al., 2017 (96)]. Additionally, some studies are limited by retrospective designs, potential overfitting of AI models, or inadequate reporting of evaluation metrics, which constrain their reproducibility and clinical applicability [e.g., Dozen et al., 2020 (42); Lu et al., 2024 (45); Borkar et al., 2018 (79); Li et al., 2022 (80)] (Tables 2-5).

Table 2

| Source | AI methods | US type | Views | Datasets | Equipment | Purpose | Performance |
|---|---|---|---|---|---|---|---|
| Bridge et al., 2017 (38) | Regression forests | FE | 4C, LVOT, 3V | 91 short ultrasound videos from 12 subjects (gestational ages: 20–35 weeks) | Unspecified | A framework for tracking the key variables that describe the content of each frame of freehand 2D US videos of the healthy fetal heart | Classification error rate of less than 20%; prediction speed of around 25 ms per frame |
| Huang et al., 2017 (39) | CNN | FE | 4C, LVOT, 3V | 91 short ultrasound videos from 12 subjects (gestational ages: 20–35 weeks) | Unspecified | A temporal CNN for fetal heart analysis in cardiac screening videos to predict multiple key variables relating to the fetal heart, including the visibility, viewing plane, location and orientation of the heart | Error rate of 21.6% on a real-world fetal cardiac video dataset |
| Yu et al., 2017 (40) | CNN | FE | Long-axis view | Training: 10 sequences; Test: 41 sequences | Unspecified | A dynamic CNN to segment fetal LV in echocardiographic sequences | HD =1.2648 mm, MAD =0.2016 mm, DICE =94.5%, average computational time =25.4 s |
| Xu et al., 2020 (41) | CNN | FE | A4C | 895 images in the original dataset; five-fold cross-validation used for model development | Unspecified | An end-to-end DW-Net for accurate segmentation of seven important anatomical structures in the A4C view, including LA, RA, LV, RV, DAO, epicardium and thorax | DSC =0.827, PA =0.933, AUC =0.990, substantially outperformed some well-known segmentation methods |
| Dozen et al., 2020 (42) | CNN | FE | A4C | 615 images from 421 US videos, a ratio of the training to test data =2:1 | GE Voluson E8/E10 | A novel segmentation method named Cropping-Segmentation-Calibration (CSC) that is specific to the ventricular septum in US videos | mIoU =0.5543, significantly higher than other models (0.0224 and 0.1519) |
| Qiao et al., 2022 (44) | CNN | FE | 4-chamber views | Training: 1,000 images; Test: 250 images | Unspecified | An intelligent feature learning detection system to visualize fetal 4-chamber views | Precision =0.919, recall =0.871, F1-score =0.944, mAP =0.953, FPS =43 |
| Lu et al., 2024 (45) | CNN | FE | 4-chamber views, including A4C, parasternal four-chamber and basal four-chamber view | Training: 973 images; Test: 200 images | Samsung, GE, Philips | A YOLOX-based deep instance segmentation neural network for cardiac anatomical structure location and segmentation in fetal ultrasound images, including LV, LA, RA, RV, IAS, IVS, RIB, DAO, SP, LVW, RVW, LL and RL | AP =0.835, speed =0.0815 s per view |
| Stoean et al., 2021 (48) | CNN | FE | A4C | Training and validation: 5,755 images; Test: 1,496 images | GE Voluson E10/E8/E6 | A DL architecture to detect the presence/absence of key frames (the aorta, the arches, the atrioventricular flows and the crossing of the great vessels) from A4C view at first trimester | Test accuracy =95%, F1-score ranging from 90.91% to 99.58% for the four key perspectives |
| Wu et al., 2023 (49) | CNN | FE | A4C, 3VC, 3VT, RVOT, LVOT | Training and validation: 3,360 images; Test: 428 images | Mindray DC-8/Resona 8/Rietta 70, Hitachi HI Vision Preiru, GE Voluson E10/E8/E6/Logiq P6/Expert 730, Erlangshen, Acoustics Aixlorer, Siemens Sequoia 512 | A method identifying the anatomical structures of different fetal heart sections and judging the standard sections according to these anatomical structures | mAP of 94.30% in identifying different anatomical structures; average accuracy of 94.60%, average recall of 91.0%, and average F1 score of 93.40% |
| Madani et al., 2018 (50) | CNN | TTE | 2DE (video and still images): PSLA, RV inflow, sax-basal, sax-mid, A4C, A5C, A2C, A3C, sub4C, IVC, subAO, supAO; Doppler: PW, CW, M-mode | A total of 223,787 images, randomly split into training, validation, and test datasets in 80:10:10 ratio | GE, Philips, Siemens | A CNN model to recognize features and classify 15 standard views for AI-assisted echocardiographic interpretation | Overall test accuracy of 97.8% in classifying 12 video views; accuracies of 98%, 83% and 99% in classifying CW, PW, and M-mode, respectively; average overall accuracy of 91.7% in classifying 15 still views (vs. 70.2–84.0% for board-certified echocardiographers) |
| Wu et al., 2022 (51) | CNN | TTE | sub4C, subSALV, subSAS, subCAS, subRVOT, A4C, A5C, LPS4C, LPS5C, sax-basal, sax-mid, PSLV, PSPA, supAO, DPMV, DP-TV, DPAAO, DP-PV, DP-DAO, DP-OTHER, M-AO, M-LV, M-OTHER | Training: 247,750 images; Test: 119,821 images | Philips iE33/EPIQ 7C | A new deep learning-based neural network method to automatically identify commonly used standard echocardiographic views | Precision =0.848, sensitivity =0.865, specificity =0.994, F1 scores of a majority of views ≥0.90 |
| Herz et al., 2021 (53) | FCN | TTE | A field of 3DE view that captured the TV annulus and leaflets | Training: 133 3DE images; Test: 28 3DE images | Philips iE33/EPIQ 7 | Fast and accurate FCN-based segmentation of the TV for rapid modeling and quantification | An average DSC of 0.77 (IQR: 0.73–0.81) and MBD of 0.6 (IQR: 0.44–0.74) mm for individual TV leaflet segmentation; the addition of commissural landmarks improved individual leaflet segmentation accuracy to an MBD of 0.38 (IQR: 0.3–0.46) mm |

2D, two-dimensional; 2DE, two-dimensional echocardiographic; 3DE, three-dimensional echocardiographic; 3V, three vessels; 3VC, three vessel catheter; 3VT, three vessel trachea; A2C, apical two chamber; A3C, apical three chamber/apical long axis; A4C, apical four-chamber view; A5C, apical five-chamber view; AI, artificial intelligence; AP, average precision; AUC, area under the curve; CNN, convolutional neural network; CW, continuous-wave Doppler; DAO, descending aorta; DICE, dice coefficient; DL, deep learning; DPAAO, Doppler recording from the ascending aorta; DP-DAO, Doppler recording from the descending aorta; DPMV, Doppler recording from the mitral valve; DP-OTHER, Doppler recording from other Doppler recordings; DP-PV, Doppler recording from the pulmonary valve; DP-TV, Doppler recording from the tricuspid valve; DSC, dice similarity coefficient; FCN, fully convolutional network; FE, fetal echocardiography; FPS, frames per second; HD, Hausdorff distance; IAS, interatrial septum; IQR, interquartile range; IVC, inferior vena cava; IVS, interventricular septum; LA, left atrium; LL, left lung; LPS4C, low parasternal four-chamber view; LPS5C, low parasternal five-chamber view; LV, left ventricle; LVOT, left ventricular outflow tract; LVW, left ventricular wall; MAD, mean absolute distance; M-AO, M-mode of the aortic; MBD, mean boundary distance; mAP, mean average precision; mIoU, mean intersection over union; M-LV, M-mode of left ventricle at the level of the papillary muscle; M-OTHER, other M-mode; PSLA, parasternal long axis; PSLV, parasternal long-axis view of the left ventricle; PSPA, parasternal view of the pulmonary artery; PW, pulsed-wave Doppler; RA, right atrium; RIB, rib; RL, right lung; RNN, recurrent neural network; RV, right ventricle; RVOT, right ventricular outflow tract; RVW, right ventricular wall; sax-basal, parasternal short-axis view at the base of the heart; sax-mid, parasternal short-axis view at the level of the mitral valve; SP, spine; sub4C, subcostal four-chamber view; subAO, subcostal/abdominal aorta; subCAS, subcostal coronal view of the atrium septum; subRVOT, subcostal short-axis view through the right ventricular outflow tract; subSALV, subcostal short-axis view of the left ventricle; subSAS, subcostal sagittal view of the atrium septum; supAO, suprasternal aorta/aortic arch; TTE, transthoracic echocardiography; TV, tricuspid valve; US, ultrasound.

Table 3

| Source | AI methods | US type | Views | Diagnosed CHD types | Datasets | Equipment | Purpose | Performance |
|---|---|---|---|---|---|---|---|---|
| Gong et al., 2020 (55) | CNN | FE | A4C, A5C, 3VT, 3VV | Fetal CHD | Training and validation: 3,596 images; Test: 400 images | GE Voluson E8/E10, Philips EPIQ 7C | A new model named DGACNN to recognize fetal CHD | Rate of recognizing fetal heart defects: 85%, higher than other networks and expert cardiologists by 1–20% |
| Arnaout et al., 2021 (56) | CNN | FE | 3VT, 3VV, LVOT, A4C, ABDO | Fetal CHD | Training and validation: 107,823 images; Test: 19,822 images from FETAL-125, 329,405 images from OB-125, 4,473,852 from OB-4000, 44,512 images from BCH-400, 10 sets of twins from TWINS-10 | GE, Siemens, Philips, Hitachi | An ensemble of neural networks to identify recommended cardiac views and distinguish between normal hearts and complex CHD | AUC =0.99, sensitivity =95% (95% CI: 84–99%), specificity =96% (95% CI: 95–97%), and negative predictive value =100% in distinguishing normal from abnormal hearts |
| Yeo et al., 2013 (57) | VIS-assistance | FE | A4C, A5C, LVOT, short-axis view of great vessels/RVOT, 3VT, ABDO/stomach, ductal arch, aortic arch, SVC/IVC | Fetal CHD | Training and validation: 51 STIC volumes; Test: 50 STIC volumes, 4 cases with proven CHD | GE Voluson E8 | FINE marking seven anatomical structures of the fetal heart to generate nine standard fetal echocardiography views in normal hearts by applying “intelligent navigation” technology to STIC volume datasets | Success rates for generating nine fetal echocardiography views: 98–100% |
| Tang et al., 2023 (72) | CNN | FE | The aortic arch view | Duct-dependent CHDs in the aortic arch, including TGA, CoA, IAA | Pre-training: 2,800 images; Training: 3,912 images; Test: 350 images; External test: 161 images from GZMC, 85 images from SZLG, 108 images from GDPH | GE Voluson E6/E8/E10/Vivi9/Logiq E9-3, Philips iE33, SSD-A (10), Aloka | A two-stage deep transfer learning model named DDCHD-DenseNet for screening critical duct-dependent CHDs | Internal datasets: AUC =0.935, sensitivity =0.843, and specificity =0.967 in duct-dependent CHD screening; prediction rate: 91.4% in TGA, 74.2% in CoA, 100% in IAA, and 96.7% in normal heart. External datasets: in the SZLG and GDPH datasets, AUC =0.885 and 0.826, sensitivity =0.769 and 0.759, and specificity =0.956 and 0.759 in duct-dependent CHD screening, respectively; prediction rate in SZLG: 63.6% in TGA, 100% in CoA, and 66.7% in IAA; prediction rate in GDPH: 68.8% in TGA, 74.1% in CoA, and 90.9% in IAA |
| Taksøe-Vester et al., 2024 (75) | CNN | FE | A4C, 3VV | CoA | 73 CoA cases vs. 7,300 healthy controls | GE Logiq 7/Voluson E6/E8/E10/E11 | An AI model to screen for CoA at 18–22 weeks | AUC =0.96, sensitivity =90.4% (95% CI: 83.7–97.2%), specificity =88.9% (95% CI: 88.2–89.6%), PPV =0.33% (95% CI: 0.2–0.5%), NPV =99.9% (95% CI: 99.9–100%), LR+ =8.17 (95% CI: 7.40–9.02) and LR– =0.11 (95% CI: 0.05–0.23) |
| Wang et al., 2021 (76) | CNN | TTE | PLAX of left ventricle, PSAX of the aorta, SXLAX of two atria, SSLAX of aortic arch | VSD, ASD | 1,380 subjects (each patient with 1–5 views of US videos), randomly divided into 90% training and 10% test | Philips iE 33/iE Elite/EPIQ 7C | A practical end-to-end framework automatically analyzing the multi-view echocardiograms | Accuracy =95.4% in diagnosing CHD or negative samples, and accuracy =92.3% in diagnosing negative, VSD or ASD samples |
| Jiang et al., 2023 (77) | CNN | TTE | Unspecified | CHD | 14,838 images, training:validation:test =8:1:1 | Philips iE 33/EPIQ5/EPIQ 7C | A new method of view selection named the seven views approach to analyze pediatric echocardiographic images in pediatric CHD | AUC =0.91, accuracy =92.3% |
| Tan et al., 2023 (78) | CNN | TTE | Unspecified | VSD, PDA, ASD | Training and validation: 4,151 videos; Internal test: 1,037 videos; External test: 692 videos | Philips iE 33 | A CHDNet model embedded with Bayesian inference and dynamic neural feedback to detect three common congenital heart defects (VSD, PDA, and ASD) | Internal test: AUC of 100%, 100% and for VSD, ASD and PDA; External test: AUC of 92%, 73% and 57% for VSD, ASD and PDA |
| Borkar et al., 2018 (79) | SVM | TTE | Unspecified | DCM, ASD | 439 frames | Philips iE33/EPIQ5/EPIQ 7C | An automatic machine learning approach to detect the ASD and DCM | Accuracy =99.53%, 97.97% and 97.40% in normal cases, ASD and DCM; the overall classification accuracy =98.30% |
| Li et al., 2022 (80) | CNN | TTE | Unspecified | ASD | Training: 19,029 images; Test: about 12,600 | Philips iE33/EPIQ 7C | An AI-assisted diagnostic model for ASD diagnosis using echocardiography | Diagnostic accuracy =97.9%, false negative rate =2.8%, false positive rate =0, significantly superior to other models (P<0.05) |
| Lin et al., 2023 (81) | CNN | TTE | A4C, PSAX, SC2A | ASD | 3,404 images | Philips iE-Elite/7C, GE Vivid E95 | A DL framework applicable to color Doppler echocardiography for automatic detection and quantification of ASD | Internal test: average accuracy =99%; External test: AUC =0.92, sensitivity =88% and specificity =89%; measurement capability of the size of defect and residual rim: the mean biases =1.9 mm and 2.2 mm |
| Wang et al., 2022 (82) | CNN | TTE | PSAX of the aorta, SXLAX of two atria, A4C | ASD | 3,474 images | Philips iE33/IE ELITE/EPIQ 7C | A DKS model to detect ASD and assist in the planning of the closure treatments | Accuracy: 94.25% (within-center) and 93.85% (cross-center), better than other models; occluder size prediction: MAE =0.7341 mm, QWK =0.9027, indicating high agreement with ground truth |
| Diller et al., 2019 (83) | CNN | TTE | A4C, PSAX | TGA | Over 100,000 images, divided into 80% training and validation and 20% test | Unspecified | A novel DL algorithm in recognizing TGA after atrial switch procedure or ccTGA | In A4C view, accuracy =95.5% and 94.4% in training and test set, respectively; diagnostic accuracy =86.2% and 91.6% for ccTGA and TGA, respectively. In PSAX of two-chamber view, accuracy =96.7% and 76.8% in training and test set, respectively; diagnostic accuracy =49.2% and 86.8% for ccTGA and TGA, respectively |
| Lei et al., 2022 (84) | CNN | TTE | Ductal view | PDA | 461 videos, 316 for training, 74 for validation, 72 for test | Unspecified | A CNN model for detection of PDA in echocardiogram video clips | AUC =0.88, PPV =0.84, NPV =0.80, sensitivity =0.76 and specificity =0.87 |
| Erno et al., 2023 (85) | CNN | TTE | PSSV, SSSV | PDA | 1,145 video clips for training and validation, about 1,380 video clips for test | Unspecified | A deep learning model to identify PDA presence in relevant echocardiographic images | Clip-level classification: sensitivity =0.80, specificity =0.77 and AUC =0.86; study-level classification: sensitivity =0.83, specificity =0.89 and AUC =0.93 |

3VT, three-vessels and trachea; 3VV, three-vessel view; A4C, apical four chamber; A5C, apical five chamber; ABDO, abdomen; AI, artificial intelligence; ASD, atrial septal defect; AUC, area under the curve; ccTGA, congenitally corrected TGA; CHD, congenital heart disease; CHDNet, Congenital Heart Disease Network; CI, confidence interval; DDCHD, duct-dependent congenital heart disease; DGACNN, DANomaly Generative Adversarial Convolution Neural Network; CNN, convolutional neural network; CoA, coarctation of the aorta; DCM, dilated cardiomyopathy; DKS, deep keypoint stadiometry; DL, deep learning; FE, fetal echocardiography; FINE, Fetal Intelligent Navigation Echocardiography; GDPH, Guangdong Provincial People’s Hospital; GZMC, Guangzhou Women and Children’s Medical Center; IAA, interrupted aortic arch; IVC, inferior vena cava; LR, likelihood ratio; LVOT, left ventricular outflow tract; MAE, mean absolute error; ML, machine learning; NPV, negative predictive value; PDA, patent ductus arteriosus; PLAX, parasternal long axis view; PPV, positive predictive value; PSAX, parasternal short-axis; PSSV, parasternal sagittal view; QWK, quadratic weighted kappa; RVOT, right ventricular outflow tract; SC2A, the subxiphoid sagittal view; SSLAX, suprasternal long-axis; SSSV, suprasternal sagittal view; STIC, spatiotemporal image correlation; SVC, superior vena cava; SVM, support vector machine; SXLAX, subxiphoid long-axis; SZLG, Shenzhen Longgang Maternal and Child Health Hospital; TGA, transposition of the great arteries; TTE, transthoracic echocardiography; US, ultrasound; VIS, virtual intelligent sonographer; VSD, ventricular septal defect.
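The diagnostic metrics reported in Table 3 (sensitivity, specificity, PPV, NPV, and likelihood ratios) all derive from the same four confusion-matrix counts. The following minimal sketch shows the standard definitions; the function name and the counts are hypothetical and do not correspond to any study in the table.

```python
def diagnostic_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute common screening metrics from confusion-matrix counts.

    Assumes every denominator is non-zero (e.g., at least one false positive).
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": tp / (tp + fp),                        # positive predictive value
        "npv": tn / (tn + fn),                        # negative predictive value
        "lr_pos": sensitivity / (1.0 - specificity),  # positive likelihood ratio
        "lr_neg": (1.0 - sensitivity) / specificity,  # negative likelihood ratio
    }


# Hypothetical counts, chosen only for illustration.
metrics = diagnostic_metrics(tp=90, fp=50, fn=10, tn=850)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Unlike sensitivity and specificity, PPV and NPV depend on how common the condition is in the test population, so a screening model can combine high sensitivity and specificity with a low PPV when positive cases are rare.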

Table 4

| Source | AI methods | US type | Views | Quantitative indicators | Datasets | Equipment | Purpose | Performance |
|---|---|---|---|---|---|---|---|---|
| Ouyang et al., 2020 (92) | CNN | TTE | A4C | EF | Training and validation: 8,742 images; Test: 1,288 images; External validation: 2,895 images | Philips iE33/Epiq 5G/Epiq 7C, Siemens Sonos/Acuson SC2000 | A video-based deep learning algorithm (EchoNet-Dynamic) that executes tasks of segmenting the left ventricle, estimating ejection fraction and assessing cardiomyopathy | Internal datasets: LV segmentation: a Dice similarity coefficient =0.92; EF estimation: MAE =4.1%; disease classification by decreased EF: AUC =0.97. External datasets: EF estimation: MAE =6.0%; disease classification: AUC =0.96 |
| Asch et al., 2019 (93) | Unconventional ML | TTE | A4C, A2C | LVEF | Training: 51,920 images; Test: 297 pairs of apical views from 99 patients | Siemens ACUSON/SC2000, Philips iE33/EPIQ 7C/CX50/SEQUOIA, GE Vivid-I/Vivid 7 | Fully automated estimation of LVEF on any pair of A2C and A4C | Prediction capability in 99 patients: MAD =2.9%±2.0%, R=0.95 (P<0.001), ICC =0.02, a minimal bias of 1.0% with LOA of ±12.1% |
| Lau et al., 2023 (94) | CNN | TTE | A4C, A2C, PLAX | LA dimension, LV wall thickness, chamber diameter, EF | Training and validation: 44,959 images; Test: 9,848 images; External validation: 9,256 images from C3PO, 10,030 images from EchoNet-Dynamic | Unspecified | A deep learning model (DROID) for the automated measurement of left heart structure and function from echocardiographic images | Prediction capability in internal datasets: MAE =2.87 mm and R² =0.74 for LAAP, MAE =2.33 mm and R² =0.86 for LVEDD, MAE =2.19 mm and R² =0.90 for LVESD, MAE =1.11 mm and R² =0.62 for IVS, MAE =1.04 mm and R² =0.49 for PWT, MAE =4.39% and R² =0.86 for LVEF. Prediction capability in external datasets (C3PO): MAE =2.52 mm and R² =0.76 for LAAP, MAE =2.09 mm and R² =0.81 for LVEDD, MAE =2.04 mm and R² =0.82 for LVESD, MAE =0.99 mm and R² =0.71 for IVS, MAE =0.93 mm and R² =0.59 for PWT, MAE =4.23% and R² =0.74 for LVEF |
| Zhang et al., 2018 (95) | CNN | TTE | PLAX, PSAX, A2C, A3C, A4C | Cardiac structure: lengths, areas, volumes, and mass estimates; Cardiac function: LVEF, longitudinal strain | Cardiac structure: 8,666 images; Cardiac function: 6,943 images | Philips iE33/EPIQ 7C/HD15, GE Vivid E9/E9/I, Siemens Acuson Sequoia, and other equipment | A fully automated pipeline for assessment of cardiac structure, function, and disease detection | Cardiac structure: the median absolute deviation for cardiac structures was in the 15% to 17% range, with LV end-systolic volume being the least consistent at 26% (9 mL). Cardiac function: automated LVEF values deviating from manual values by an absolute value of 6% (relative value of 9.7%) and longitudinal strain deviating by an absolute value of 1.4% (relative value of 7.5%) |
| Yu et al., 2017 (96) | BP neural network | FE | Four-chamber view | LV volume, SV, EF | 50 images, training vs. test =1:1 | GE VOLUSON 730 | A BP neural network method to predict LV volume, and then calculate SV and EF | Agreement between the BP neural network and volume references: the average bias =0.03 mL (95% CI: −0.15 to 0.20 mL) for SV, the average bias =0 mL (95% CI: −0.13 to 0.13 mL) for EF |
| Tromp et al., 2022 (97) | CNN | TTE | 2D videos: A4C, A2C, PLAX, and other views; Doppler modalities: PWTDI (lateral, medial, and TrV), M-mode (TrV and other views), pulsed wave (mitral valve and other views), continuous wave (TrV, aortic outflow velocity, and other views) | e', E/e', LVEF | Training: 1,145 images from ATTRaCT; Test: 406 images; External validation: 1,029 images from Alberta HEART, 31,241 images from Mackay Memorial Hospital, 10,030 images from EchoNet-Dynamic | Unspecified | Automated interpretation of LV systolic function (EF) and diastolic function (E/e' ratio) | ATTRaCT: chamber segmentation: a mean Dice similarity coefficient (a measure of similarity of the annotations) ranging from 93.0% to 94.3%; the correlation between automated and manual measurements: r=0.89 (MAE 5.5%) for LVEF, r=0.92 (MAE 0.7 cm/s) for e' lateral, and r=0.90 (MAE 1.7) for E/e' ratio; cardiac function identification: AUC =0.96 for systolic dysfunction (LVEF <40%), AUC =0.96 for diastolic dysfunction (E/e' ≥13). External datasets: the correlation between automated and manual measurements: MAE =6–10% for LVEF, MAE =1.8–2.2 for E/e' ratio; cardiac function identification: AUC =0.90–0.92 for systolic dysfunction (LVEF <40%), AUC =0.91 for diastolic dysfunction (E/e' ≥13) |

A2C, apical two-chamber; A3C, apical three-chamber; A4C, apical four-chamber; ATTRaCT, Asian Network for Translational Research and Cardiovascular Trials; AUC, area under curve; BP, backpropagation; C3PO, Community Care Cohort Project; CI, confidence interval; CNN, convolution neural network; DROID, Dimensional Reconstruction of Imaging Data; EF, ejection fraction; FE, fetal echocardiography; GE, General Electric; ICC, intraclass correlation; IVS, interventricular septal wall thickness; LA, left atrial; LAAP, left atrial anteroposterior dimension; LOA, limits of agreement; LV, left ventricular; LVEDD, left ventricular end diastolic dimension; LVEF, left ventricular ejection fraction; LVESD, left ventricular end systolic dimension; MAD, mean absolute distance; MAE, mean absolute error; ML, machine learning; PLAX, parasternal long axis; PSAX, parasternal short axis; PWT, posterior wall thickness; PWTDI, pulsed-wave Doppler tissue imaging; SV, stroke volume; TrV, tricuspid regurgitation peak velocity; TTE, transthoracic echocardiography; US, ultrasound.

Table 5

| Source | AI methods | US type | Views | Datasets | Equipment | Purpose | Performance |
|---|---|---|---|---|---|---|---|
| Abdi et al., 2017 (101) | CNN | TTE | A4C | 6,916 images, partitioned into 80% training-validation and 20% test | Philips iE33 | Real-time evaluation of A4C image quality during ultrasound image acquisition (within 10 ms) | A mean absolute error of 0.72±0.59 when compared with the expert’s manual echo scores |
| Abdi et al., 2017 (102) | CNN | TTE | A2C, A3C, A4C, PSAXA, PSAXPM | 2,450 echo cines (from 509 separate patients), partitioned into 80% training-validation and 20% test | Philips, GE | Real-time evaluation of five views image quality during ultrasound image acquisition (20 frame echo sequence within 10 ms) | A mean quality score accuracy of 85% compared to the gold-standard score assigned by experienced echosonographers |
| Diller et al., 2019 (104) | DNN | TTE | A4C | Training: 92,918 images from patients with CHD and 20,000 images from subjects with normal heart; Test: 60,502 images from patients with CHD, 4,354 images from subjects with normal heart | Unspecified | Automatic denoising and artefact removal of A4C images in echocardiographic post-processing stage | The denoising and deartifact images are similar to the original images to the naked eye, and the image quality improvement effect is significant in quantitative analysis (AE trained on CHD and normal hearts vs. baseline after adding noise), denoising: cross-entropy =0.2899±0.0441 vs. 2.7603±0.3116, squared difference =161.20±23.08 vs. 1926.86±27.76; artefact removal: cross-entropy =0.2744±0.0435 vs. 1.5518±0.5904, squared difference =107.00±49.87 vs. 453.29±242.22 |

A2C, apical two-chamber; A3C, apical three-chamber; A4C, apical four-chamber; AE, autoencoder; AI, artificial intelligence; CHD, congenital heart disease; CNN, convolution neural network; DNN, deep neural network; FE, fetal echocardiography; PSAXA, parasternal short axis at the aortic valve level; PSAXPM, parasternal short axis at the papillary muscle level; TTE, transthoracic echocardiography; US, ultrasound.

In addition, several limitations still hinder the application of AI in the automated analysis of echocardiography. These include: (I) bias in AI algorithms: AI algorithms are prone to biases stemming from underrepresentation of certain demographic groups, such as different ethnicities or socioeconomic backgrounds. This can lead to disparities in clinical outcomes, particularly in pediatric populations with unique physiological and pathological characteristics. The studies reviewed included a limited number of positive CHD cases for training and testing, particularly for rare types of CHD, and few employed external datasets for validation, which may account for prediction bias and undermine the clinical applicability of the models; (II) low-quality data and overfitting: models trained on limited or noisy datasets may suffer from overfitting, performing well on training data but poorly in real-world applications. In some studies, AI-assisted automated systems demonstrated inferior recognition and measurement capabilities compared to manual interpretation when dealing with suboptimal-quality images. These issues underscore the necessity for high-quality, diverse, and annotated datasets; and (III) ethical and regulatory challenges: implementing AI in pediatric care raises ethical concerns, including safeguarding data privacy and ensuring that parents or guardians provide informed consent. Additionally, the lack of universal regulatory standards for AI deployment in clinical settings may delay adoption and complicate cross-institutional integration.

These limitations highlight the need for future research to emphasize rigorous study design and transparent reporting. We propose several future directions for AI-driven echocardiography in CHD management. First, researchers could explore emerging technologies, such as generative AI, to create synthetic images for model training. This would address privacy and data security concerns while improving model robustness. Additionally, integrating multimodal imaging (echocardiography, MRI, and CT) could enhance diagnostic accuracy and clinical applicability. AI models combining these modalities may overcome individual limitations and improve patient outcomes. Furthermore, AI’s application in other CHD diagnostic tools, such as auscultation, electrocardiography, and genetic testing, could lead to new integrated screening methods (108). On data sources, strengthening multicenter, cross-regional, and cross-cultural collaborations will expand real-world CHD imaging databases and increase diversity in study populations. Incorporating various ultrasound devices would improve model generalizability. Lastly, future research should focus on AI’s role in guiding CHD treatments, particularly in surgical and interventional procedures, such as determining occluder sizes. These advancements could significantly enhance clinical decision-making and patient care.

AI in medical imaging faces several specific challenges, including: (I) regulatory challenges: AI technologies must undergo rigorous validation and approval by bodies like the Food and Drug Administration (FDA) and European Medicines Agency (EMA), a time-consuming process that may delay clinical implementation; (II) cost issues: while the initial investment in AI is high, its potential lies in reducing long-term healthcare costs through improved diagnostic accuracy, minimized human error, and optimized workflow; (III) system integration: integrating AI into existing clinical workflows presents challenges in ensuring compatibility with current systems and providing effective training for clinicians; and (IV) clinical acceptance: clinician trust and acceptance are key to successful AI implementation.

To address these challenges, we recommend: (I) strengthening data privacy and security regulations; (II) promoting cross-sector collaboration to ensure compatibility between new technologies and existing healthcare processes; (III) investing in workforce training and technological infrastructure; and (IV) embracing emerging technologies, such as using generative AI to produce synthetic pediatric cardiac images for model training, thereby addressing data privacy and availability concerns. Additionally, ensuring equity in AI development is crucial. Strategies include: (I) diversifying datasets: ensuring that AI training datasets represent different genders, age groups, and ethnicities; (II) promoting transparency: requiring fairness reports and regular algorithm reviews to address biases; and (III) implementing targeted policies: developing standards to prevent unfair outcomes in AI applications.
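
To make the idea of a fairness report more concrete, the following minimal Python sketch computes sensitivity and specificity separately for each subgroup. It is an illustration only: the function name `subgroup_report`, the input layout, and the group labels are hypothetical, and an actual report would also need confidence intervals and adequate sample sizes in every subgroup.

```python
from collections import defaultdict


def subgroup_report(rows):
    """Per-subgroup sensitivity and specificity.

    `rows` is an iterable of (group, y_true, y_pred) tuples, where
    y_true and y_pred are 1 (CHD) or 0 (no CHD). The layout is an
    assumption made for this sketch.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, y_true, y_pred in rows:
        c = counts[group]
        if y_true == 1:
            c["tp" if y_pred == 1 else "fn"] += 1
        else:
            c["tn" if y_pred == 0 else "fp"] += 1

    report = {}
    for group, c in counts.items():
        pos = c["tp"] + c["fn"]
        neg = c["tn"] + c["fp"]
        report[group] = {
            "n": pos + neg,
            # None marks a metric that is undefined for this subgroup.
            "sensitivity": c["tp"] / pos if pos else None,
            "specificity": c["tn"] / neg if neg else None,
        }
    return report


if __name__ == "__main__":
    demo = [
        ("group_1", 1, 1), ("group_1", 1, 0), ("group_1", 0, 0),
        ("group_2", 1, 1), ("group_2", 0, 1),
    ]
    for group, metrics in subgroup_report(demo).items():
        print(group, metrics)
```

Marked differences in these metrics between subgroups would flag a potential bias that warrants the kind of algorithm review recommended above.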

Conclusions

This review provides a comprehensive narrative synthesis of the application of AI in CHD echocardiographic imaging, covering image segmentation, assistive diagnosis, cardiac function measurement, image quality control, and multimodal fusion. It discusses the role of AI in both prenatal and postnatal CHD evaluation and highlights research gaps, offering insights for future studies. However, the literature reviewed has limitations: it comes primarily from Europe, America, and East Asia (China and Japan), which introduces regional bias. The scarcity of studies from other regions, such as South and Central Asia, Latin America, and Africa, limits the review’s generalizability. Future work should include studies from more diverse regions to improve completeness.

In conclusion, AI’s rapid development has significantly impacted cardiovascular medicine, improving CHD diagnosis and treatment. AI algorithms in echocardiographic imaging offer automation, reproducibility, and enhanced image acquisition, reconstruction, and analysis, bridging skill gaps and improving clinical outcomes for CHD patients. As AI evolves, it may transform medical practice, advance precision medicine, and become a valuable tool in CHD diagnosis and treatment.

Acknowledgments

None.

Footnote

Provenance and Peer Review: This article was commissioned by the Guest Editor (Paolo Scanagatta) for the series “Pediatric Medicine and Surgery at the beginning of Artificial Intelligence Era” published in Pediatric Medicine. The article has undergone external peer review.

Reporting Checklist: The authors have completed the Narrative Review reporting checklist. Available at https://pm.amegroups.com/article/view/10.21037/pm-24-72/rc

Peer Review File: Available at https://pm.amegroups.com/article/view/10.21037/pm-24-72/prf

Funding: None.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://pm.amegroups.com/article/view/10.21037/pm-24-72/coif). The series “Pediatric Medicine and Surgery at the beginning of Artificial Intelligence Era” was commissioned by the editorial office without any funding or sponsorship. G.H. serves as the Editor-in-Chief of Pediatric Medicine. The authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Zhang J, Xiao S, Zhu Y, et al. Advances in the Application of Artificial Intelligence in Fetal Echocardiography. J Am Soc Echocardiogr 2024;37:550-61.

- Ma K, He Q, Dou Z, et al. Current treatment outcomes of congenital heart disease and future perspectives. Lancet Child Adolesc Health 2023;7:490-501.

- van der Bom T, Zomer AC, Zwinderman AH, et al. The changing epidemiology of congenital heart disease. Nat Rev Cardiol 2011;8:50-60.

- Chen X, Zhao S, Dong X, et al. Incidence, distribution, disease spectrum, and genetic deficits of congenital heart defects in China: implementation of prenatal ultrasound screening identified 18,171 affected fetuses from 2,452,249 pregnancies. Cell Biosci 2023;13:229.

- Zhao QM, Liu F, Wu L, et al. Prevalence of Congenital Heart Disease at Live Birth in China. J Pediatr 2019;204:53-8.

- Pruetz JD, Wang SS, Noori S. Delivery room emergencies in critical congenital heart diseases. Semin Fetal Neonatal Med 2019;24:101034.

- Makkar N, Satou G, DeVore GR, et al. Prenatal Detection of Congenital Heart Disease: Importance of Fetal Echocardiography Following Normal Fetal Cardiac Screening. Pediatr Cardiol 2024;45:1203-10.

- Stout KK, Daniels CJ, Aboulhosn JA, et al. 2018 AHA/ACC Guideline for the Management of Adults With Congenital Heart Disease: A Report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines. Circulation 2019;139:e698-800.

- Mcleod G, Shum K, Gupta T, et al. Echocardiography in Congenital Heart Disease. Prog Cardiovasc Dis 2018;61:468-75.

- Roos-Hesselink JW, Pelosi C, Brida M, et al. Surveillance of adults with congenital heart disease: Current guidelines and actual clinical practice. Int J Cardiol 2024;407:132022.

- Feltes TF, Bacha E, Beekman RH 3rd, et al. Indications for cardiac catheterization and intervention in pediatric cardiac disease: a scientific statement from the American Heart Association. Circulation 2011;123:2607-52.

- Bouma BJ, Mulder BJ. Changing Landscape of Congenital Heart Disease. Circ Res 2017;120:908-22.

- Bizopoulos P, Koutsouris D. Deep Learning in Cardiology. IEEE Rev Biomed Eng 2019;12:168-93.

- Dey D, Slomka PJ, Leeson P, et al. Artificial Intelligence in Cardiovascular Imaging: JACC State-of-the-Art Review. J Am Coll Cardiol 2019;73:1317-35.

- Krittanawong C, Zhang H, Wang Z, et al. Artificial Intelligence in Precision Cardiovascular Medicine. J Am Coll Cardiol 2017;69:2657-64.

- Krittanawong C, Johnson KW, Rosenson RS, et al. Deep learning for cardiovascular medicine: a practical primer. Eur Heart J 2019;40:2058-73.

- Deo RC. Machine Learning in Medicine. Circulation 2015;132:1920-30.

- Wang SY, Pershing S, Lee AY, et al. Big data requirements for artificial intelligence. Curr Opin Ophthalmol 2020;31:318-23.

- Currie G, Hawk KE, Rohren E, et al. Machine Learning and Deep Learning in Medical Imaging: Intelligent Imaging. J Med Imaging Radiat Sci 2019;50:477-87.

- Johnson KW, Torres Soto J, Glicksberg BS, et al. Artificial Intelligence in Cardiology. J Am Coll Cardiol 2018;71:2668-79.

- Yamashita R, Nishio M, Do RKG, et al. Convolutional neural networks: an overview and application in radiology. Insights Imaging 2018;9:611-29.

- Oikonomou EK, Siddique M, Antoniades C. Artificial intelligence in medical imaging: A radiomic guide to precision phenotyping of cardiovascular disease. Cardiovasc Res 2020;116:2040-54.

- Chartrand G, Cheng PM, Vorontsov E, et al. Deep Learning: A Primer for Radiologists. Radiographics 2017;37:2113-31.

- Xia Y, Chen L, Lu J, et al. The comprehensive study on the role of POSTN in fetal congenital heart disease and clinical applications. J Transl Med 2023;21:901.

- Zhao QM, Ma XJ, Ge XL, et al. Pulse oximetry with clinical assessment to screen for congenital heart disease in neonates in China: a prospective study. Lancet 2014;384:747-54.

- van Nisselrooij AEL, Teunissen AKK, Clur SA, et al. Why are congenital heart defects being missed? Ultrasound Obstet Gynecol 2020;55:747-57.

- AIUM Practice Parameter for the Performance of Fetal Echocardiography. J Ultrasound Med 2020;39:E5-E16.

- Haberer K, He R, McBrien A, et al. Accuracy of Fetal Echocardiography in Defining Anatomic Details: A Single-Institution Experience over a 12-Year Period. J Am Soc Echocardiogr 2022;35:762-72.

- Mitchell C, Rahko PS, Blauwet LA, et al. Guidelines for Performing a Comprehensive Transthoracic Echocardiographic Examination in Adults: Recommendations from the American Society of Echocardiography. J Am Soc Echocardiogr 2019;32:1-64.

- Lopez L, Saurers DL, Barker PCA, et al. Guidelines for Performing a Comprehensive Pediatric Transthoracic Echocardiogram: Recommendations From the American Society of Echocardiography. J Am Soc Echocardiogr 2024;37:119-70.

- Sachdeva R, Armstrong AK, Arnaout R, et al. Novel Techniques in Imaging Congenital Heart Disease: JACC Scientific Statement. J Am Coll Cardiol 2024;83:63-81.

- Balestrini L, Fleishman C, Lanzoni L, et al. Real-time 3-dimensional echocardiography evaluation of congenital heart disease. J Am Soc Echocardiogr 2000;13:171-6.

- Colen T, Smallhorn JF. Three-dimensional echocardiography for the assessment of atrioventricular valves in congenital heart disease: past, present and future. Semin Thorac Cardiovasc Surg Pediatr Card Surg Annu 2015;18:62-71.

- Behera SK, Ding VY, Chung S, et al. Impact of Fetal Echocardiography Comprehensiveness on Diagnostic Accuracy. J Am Soc Echocardiogr 2022;35:752-761.e11.

- Friedberg MK, Silverman NH, Moon-Grady AJ, et al. Prenatal detection of congenital heart disease. J Pediatr 2009;155:26-31, 31.e1.

- Narula S, Shameer K, Salem Omar AM, et al. Machine-Learning Algorithms to Automate Morphological and Functional Assessments in 2D Echocardiography. J Am Coll Cardiol 2016;68:2287-95.

- Khamis H, Zurakhov G, Azar V, et al. Automatic apical view classification of echocardiograms using a discriminative learning dictionary. Med Image Anal 2017;36:15-21.

- Bridge CP, Ioannou C, Noble JA. Automated annotation and quantitative description of ultrasound videos of the fetal heart. Med Image Anal 2017;36:147-61.

- Huang W, Bridge CP, Noble JA, et al. Temporal HeartNet: Towards Human-Level Automatic Analysis of Fetal Cardiac Screening Video. In: Descoteaux M, Maier-Hein L, Franz A, et al. editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2017. MICCAI 2017. Lecture Notes in Computer Science, vol 10434. Cham: Springer International Publishing; 2017.

- Yu L, Guo Y, Wang Y, et al. Segmentation of Fetal Left Ventricle in Echocardiographic Sequences Based on Dynamic Convolutional Neural Networks. IEEE Trans Biomed Eng 2017;64:1886-95.

- Xu L, Liu M, Shen Z, et al. DW-Net: A cascaded convolutional neural network for apical four-chamber view segmentation in fetal echocardiography. Comput Med Imaging Graph 2020;80:101690.

- Dozen A, Komatsu M, Sakai A, et al. Image Segmentation of the Ventricular Septum in Fetal Cardiac Ultrasound Videos Based on Deep Learning Using Time-Series Information. Biomolecules 2020;10:1526.

- Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab N, Hornegger J, Wells W, et al. editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. MICCAI 2015. Lecture Notes in Computer Science, vol 9351. Cham: Springer International Publishing; 2015.

- Qiao S, Pang S, Luo G, et al. FLDS: An Intelligent Feature Learning Detection System for Visualizing Medical Images Supporting Fetal Four-Chamber Views. IEEE J Biomed Health Inform 2022;26:4814-25.

- Lu Y, Li K, Pu B, et al. A YOLOX-Based Deep Instance Segmentation Neural Network for Cardiac Anatomical Structures in Fetal Ultrasound Images. IEEE/ACM Trans Comput Biol Bioinform 2024;21:1007-18.